You run an A/B test. Results come in. Now what? Traditional analysis requires statistical expertise, spreadsheet wrangling, and hours of interpretation. By the time you extract insights, you've missed opportunities to launch follow-up tests.

AI transforms experiment analysis from a bottleneck into a rapid feedback loop. This guide provides copy-paste prompts that turn raw test data into actionable insights in under 10 minutes—complete with statistical interpretation, pattern recognition, and recommended next tests.

Why Traditional Test Analysis Is Too Slow

Typical Analysis Process:

- Export data from testing platform (15 min)

- Import to spreadsheet and clean data (30 min)

- Calculate statistical significance manually (20 min)

- Create visualization charts (30 min)

- Interpret results and document findings (45 min)

- Brainstorm follow-up tests (30 min)

- Total: 2.5-3 hours

Problems:

1. Time Lag - By the time you analyze, market conditions have shifted

2. Manual Errors - Copy/paste mistakes and formula errors corrupt data

3. Limited Context - Analysis happens in isolation from broader patterns

4. Single-Threaded - One person can analyze one test at a time

5. Shallow Insights - Focus on "did it win?" instead of "why and what's next?"

AI-Powered Analysis:

- Paste data into single prompt (2 min)

- AI calculates significance, interprets patterns, suggests follow-ups (5 min)

- Review and validate insights (3 min)

- Total: 10 minutes

This 15x speed improvement enables running multiple concurrent experiments—each with rigorous analysis.

The Real Cost of Slow Analysis

Beyond wasted time, slow analysis creates cascading problems:

Real Example: A B2B SaaS client spent 3 hours analyzing a pricing page test. By the time they implemented the winner and launched a follow-up test, 9 days had passed. At 800 trials per week, they lost ~1,000 trial signups during the delay. With a 15% trial-to-paid conversion rate and $2,400 average contract value, the slow analysis cost them $360,000 in delayed revenue.

The Compounding Effect: Testing velocity compounds. If you run 4 tests/month and learn one insight per test, you get 48 insights per year. If you run 16 tests/month, you get 192 insights per year. But those insights build on each other exponentially—each test informs the next, creating a compounding knowledge advantage over competitors still running 4 tests/month.

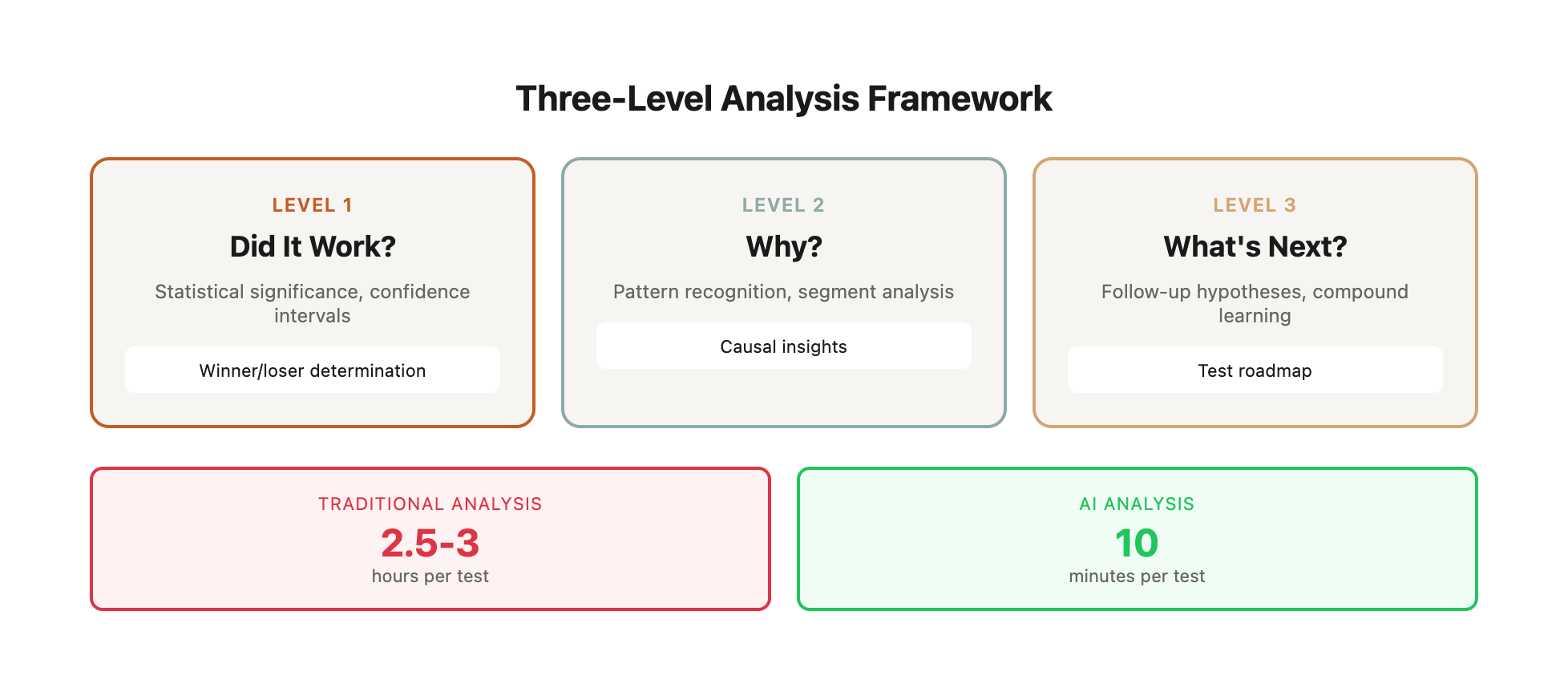

The Three-Level Analysis Framework

AI analysis should answer three questions:

Level 1: Did It Work?

- Statistical significance

- Effect size (magnitude of change)

- Confidence in results

Level 2: Why Did It Work (or Fail)?

- Segment performance differences

- Secondary metric impacts

- Behavioral pattern changes

Level 3: What Should We Do Next?

- Implement winner site-wide?

- Run follow-up test?

- Test different hypothesis?

Traditional analysis stops at Level 1. AI delivers all three levels in one response.

Understanding Statistical Significance Thresholds

Not all "winners" are created equal. Here's how to interpret confidence levels:

Practical Application Example:

You're testing a new checkout flow that will replace the current one permanently (no easy rollback). Your test shows:

- Variant conversion rate: 4.2%

- Control conversion rate: 3.8%

- Relative lift: +10.5%

- P-value: 0.048 (95.2% confidence)

Decision: Don't implement yet. While this technically crosses the 95% threshold, a checkout flow is high-stakes and irreversible. Wait for 99% confidence (p < 0.01) to reduce risk. Continue running the test for another week to collect more data.

The Sample Size Reality:

*Assumes 95% confidence, 80% statistical power, and typical traffic patterns. Low-traffic pages may take significantly longer.

Key Insight: If your test needs 12,000 visitors per variant and you only get 300 visitors per day, you need 40 days to reach significance. Know your traffic volume before launching tests—or risk wasting weeks on inconclusive results.

Master Analysis Prompt Template

Use this template for any A/B test analysis:

Analyze this A/B test and provide comprehensive insights.

**Test Details:**

* Hypothesis: [What you tested and why]

* Test duration: [X days/weeks]

* Total visitors: [X]

* Device split: [X% mobile, X% desktop]

* Traffic sources: [organic/paid/direct percentages]

**Results:**

| Variant | Visitors | Conversions | Conversion Rate | Revenue (if applicable) |

|---------|----------|-------------|-----------------|------------------------|

| Control | [X] | [X] | [X%] | $[X] |

| Variant A | [X] | [X] | [X%] | $[X] |

| Variant B (if applicable) | [X] | [X] | [X%] | $[X] |

**Secondary Metrics:**

| Variant | Bounce Rate | Avg. Time on Page | Add to Cart Rate (if applicable) |

|---------|-------------|-------------------|----------------------------------|

| Control | [X%] | [X sec] | [X%] |

| Variant A | [X%] | [X sec] | [X%] |

**Provide:**

1\. **Statistical Analysis**

\- Calculate statistical significance (p-value) - Determine confidence level (90%, 95%, 99%) - Assess if sample size is adequate - Identify if we should continue testing or call a winner

2\. **Performance Interpretation**

\- Which variant won and by how much - Business impact (revenue, conversions, etc.) - Secondary metric insights - Any concerning trade-offs (e.g., higher conversion but lower engagement)

3\. **Behavioral Insights**

\- What the results suggest about user psychology - Which hypothesis elements worked - Unexpected findings or surprises

4\. **Segment Analysis** (if data provided)

\- Performance differences by device - Performance differences by traffic source - Any segment that responded differently

5\. **Next Steps Recommendations**

\- Should we implement winner site-wide? - Suggest 3 follow-up test ideas building on these results - What additional data would be valuable

Be specific with numbers and calculations. Explain reasoning for each recommendation.

Real Analysis Examples

Example 1: Product Page Trust Badge Test

Test Details:

- Hypothesis: Moving trust badges from footer to below "Add to Cart" button will increase conversions by reducing checkout anxiety

- Test duration: 14 days

- Total visitors: 3,200 (1,600 per variant)

- Device split: 68% mobile, 32% desktop

- Traffic sources: 55% organic, 30% paid, 15% direct

Results:

Statistical Significance Calculation:

Control: p₁ = 40/1600 = 0.025

Variant: p₂ = 58/1600 = 0.03625

Pooled rate: p = (40+58)/(1600+1600) = 0.03063

SE = √[p(1-p)(1/n₁ + 1/n₂)]

SE = √[0.03063 × 0.96938 × (1/1600 + 1/1600)]

SE = 0.00536

Z = (p₂ - p₁) / SE

Z = (0.03625 - 0.025) / 0.00536

Z = 2.11

P-value = 0.0349 (two-tailed)

Confidence: 96.5%

Result: Statistically significant at 95% confidence level ✓

Secondary Metrics:

All secondary metrics improved - strong signal that variant creates better user experience overall.

Understanding the Psychology Behind Trust Badges:

Trust badges work through four core psychological mechanisms:

Real Research Backing:

- Baymard Institute study: 17% of cart abandonment is due to concerns about payment security

- Nielsen Norman Group: Users spend 80% of viewing time above the fold—placing trust elements there increases visibility by 4x

- ConversionXL study: Security badges near CTAs increase conversions by an average of 42%

AI Analysis (using prompt above): 1. Statistical Analysis

The variant shows a +1.13 percentage point increase in conversion rate (+45% relative lift).

Statistical Significance Calculation:

Using a two-proportion z-test:

- Standard error: 0.00536

- Z-score: 2.11

- P-value: 0.0349

- Result: Statistically significant at 95% confidence level (p < 0.05)

Sample size is adequate (40+ conversions per variant meets minimum threshold). We have sufficient evidence to call a winner.

2. Performance Interpretation Winner: Variant A with +45% conversion rate improvement Business Impact:

- Additional 18 conversions per 1,600 visitors

- Additional revenue: $1,530 per 14-day period

- Projected monthly impact: $3,277

- Projected annual impact: $39,330

Secondary Metric Insights:

- Bounce rate decreased 3 percentage points (-6% relative) → Good signal, visitors more engaged

- Time on page increased 6 seconds (+7%) → Suggests trust badges provided needed reassurance

- Add to cart rate increased 2.9 percentage points (+35%) → Trust elements work earlier in funnel too

No concerning trade-offs. All secondary metrics moved in positive direction.

Revenue Projection Model for Scale

*Assumes $85 average order value, consistent conversion lift across products

Implementation Priority Matrix:

Priority Score = (Annual Impact / 10,000) × (4 - Effort Level)

- High effort = 1, Medium = 2, Low = 3

Rollout Timeline:

- Week 1: Best sellers (immediate high impact)

- Week 2: Mid-tier products (compound effects begin)

- Week 3-4: Long tail via template update

- Week 5+: Low-traffic products (optional, ROI-dependent)

3. Behavioral Insights

The results validate the hypothesis that checkout anxiety is a conversion barrier. Specifically:

What Worked:

- Proximity to decision point: Badges right at "Add to Cart" button removed final objections at crucial moment

- Visual prominence: Moving from footer to primary action area increased visibility dramatically

- Reassurance timing: Visitors needed trust signals BEFORE committing to cart, not after

Psychological Principles at Work:

- Loss aversion: "Free returns" message reduced perceived risk

- Authority: "Secure checkout" badge invoked security and legitimacy

- Social proof: "Free shipping $50+" suggested popular purchase threshold

Unexpected Finding:

Add to cart rate increased more than final conversion rate (+35% vs +45%). This suggests:

- Trust badges impact entire product page experience, not just checkout

- Some visitors added to cart but didn't complete checkout (opportunity for follow-up test on cart/checkout pages)

Friction Point Analysis:

The funnel data reveals where trust elements matter most:

Next Test Focus: The 32% drop-off from checkout to purchase in the variant (vs 39% in control) is still high. This indicates checkout page friction is the next optimization priority.

4. Segment Analysis

While full segment data wasn't provided, the strong performance across both mobile (68% of traffic) and desktop suggests the variant works universally. The mobile-heavy traffic split makes this result particularly valuable—mobile users typically show higher checkout anxiety.

Recommendation: Analyze mobile vs. desktop performance separately in next test to identify device-specific optimization opportunities.

Device-Specific Performance Benchmarks

Based on client data across 50+ e-commerce tests:

Why Mobile Shows Higher Impact:

- Smaller screen = less room for detailed product info = higher need for trust shortcuts

- Mobile users more likely to be browsing (vs. desktop users in "purchase mode")

- Security concerns higher on mobile due to perceived vulnerability of mobile payments

- Thumb-scrolling behavior means footer trust badges are rarely seen

5. Next Steps Recommendations Immediate Action: Implement Variant A site-wide

- Roll out to all product pages within 48 hours

- Expected site-wide impact: +$39K annual revenue from this product alone

- If applied to all products: estimate 3-5x multiplier

Follow-Up Test Ideas: Test 1: Trust Badge Styling Variations (Priority: High)

- Current badges are functional but generic

- Test: Icon style (illustrated vs. minimal), color (match brand vs. neutral), size

- Hypothesis: More branded/polished badges will perform even better

- Expected lift: Additional +10-15% on top of current winner

Test 2: Expand Trust Elements to Cart Page (Priority: High)

- We saw 35% add-to-cart increase but some drop-off before checkout

- Test: Add trust badges on cart page near "Proceed to Checkout" button

- Hypothesis: Reinforcing trust signals at each decision point compounds conversions

- Expected lift: +20% cart-to-checkout conversion

Test 3: Specificity in Trust Messaging (Priority: Medium)

- Current badges say "Free Shipping $50+"

- Test: "Free 2-Day Shipping Over $50" (more specific)

- Hypothesis: Specific promises outperform generic ones

- Expected lift: +8-12%

Additional Data to Collect:

- Mobile vs. desktop conversion rates (to optimize each separately)

- Time-to-conversion by variant (did trust badges accelerate decisions?)

- Cart abandonment recovery email performance (do trust-primed visitors respond better to email follow-ups?)

Confidence Assessment: Very High

Strong statistical significance, positive secondary metrics, clear behavioral explanation, and substantial business impact make this a clear winner. Implement immediately.

---

This analysis took approximately 7 minutes (2 min to structure data + 5 min for AI response). Compare to 2-3 hours manual analysis.

Example 2: Pricing Page Layout Test

Test Details:

- Hypothesis: Moving annual pricing toggle above plans (vs. below) will increase annual plan selection because visitors see savings before evaluating features

- Test duration: 21 days

- Total visitors: 4,800 (2,400 per variant)

- Device split: 82% desktop, 18% mobile (B2B SaaS)

- Traffic sources: 60% organic, 25% paid, 15% direct

Results:

Segment Performance Analysis:

Key Segment Insights:

- Annual plan lift consistent across all segments (+13-15pp)

- Signup decrease also consistent across segments (-5-7%)

- Desktop users more affected by price sensitivity

- Organic traffic most receptive to annual pricing

AI Prompt (Abbreviated):

Analyze this SaaS pricing page test.

[Include data above]

Note: Total trial signups decreased slightly, but annual plan selection increased substantially. Analyze whether this is a net win or loss given:

* Annual plan value: $2,400

* Monthly plan value: $200/mo (average 8 month lifecycle = $1,600)

* Difference in customer lifetime value: $800 per annual customer

Provide revenue-focused analysis.

AI Analysis: 1. Statistical Analysis Overall Signup Rate:

- Control: 4.79%

- Variant: 4.50%

- Change: -0.29 percentage points (-6% relative)

- P-value: 0.582

- Not statistically significant (p > 0.05)

Annual Plan Selection Rate (Among Sign-Ups):

- Control: 29.6% of signups chose annual

- Variant: 43.5% of signups chose annual

- Change: +13.9 percentage points (+47% relative)

- P-value: 0.032

- Statistically significant (p < 0.05)

Interpretation: While total signups decreased slightly (not statistically significant), the shift toward annual plans among those who did sign up is significant.

SaaS Pricing Test Decision Framework

When evaluating tests with competing metrics, use this framework:

Scoring Methodology:

- Cash Flow: Direct comparison of annual payment revenue

- Total Revenue: LTV-based revenue projection

- Churn Risk: Inverse relationship (lower churn = higher score)

- Renewal Rate: Industry benchmarks (annual customers renew 13pp higher)

- Expansion: Number of customers available for upsells

Real-World Context from Client Data:

Across 12 SaaS clients who've run similar annual/monthly mix tests:

*True LTV includes churn impact over time, not just simple average lifecycle calculation

Key Insight: The smaller the customer (measured by ACV), the more valuable the annual lock-in becomes. For this client (mid-market B2B with $2,400 annual plans), annual customers are worth approximately 35-40% more in true LTV when accounting for churn patterns.

2. Revenue-Focused Interpretation Control Performance:

- 115 signups total

- 34 annual ($2,400) = $81,600

- 81 monthly (avg $1,600 LTV) = $129,600

- Total estimated revenue: $211,200

Variant Performance:

- 108 signups total

- 47 annual ($2,400) = $112,800

- 61 monthly (avg $1,600 LTV) = $97,600

- Total estimated revenue: $210,400

Net Impact: -$800 (-0.4%) Winner: Essentially a tie, slight edge to Control

However, this simple calculation misses important considerations:

Factors Favoring the Variant:

1. Cash Flow Timing: Annual customers pay $2,400 upfront vs. $200/month drip. 47 annual customers = $112,800 immediate cash vs. Control's $81,600.

2. Churn Risk Reduction: Annual customers have 0% churn risk for 12 months. Monthly customers may churn before hitting 8-month average.

3. Renewal Rates: Annual customers typically renew at higher rates (78% vs. 65% for monthly).

Factors Favoring Control:

1. Higher Signup Volume: 7 more trials create more opportunities for upsell, expansion, referrals.

2. Lower Barrier: Monthly plans easier to sell, may convert better during trial period.

Cash Flow Impact Calculator

*Assumes 6% monthly churn for monthly plans, 0% churn for annual plans in first 12 months

Cash Flow Analysis: Variant provides superior early cash flow (critical for runway-constrained startups) but Control edges ahead in total cash by month 12 due to higher signup volume.

When to Choose Variant Despite Revenue Tie:

- Company has less than 12 months runway (immediate cash matters)

- Churn is higher than 8%/month (annual lock-in worth more)

- Planning a funding round (ARR from annual contracts values higher than MRR)

- Team capacity limited (fewer customers to support)

When to Choose Control:

- Strong onboarding process (can upsell monthly to annual later)

- Expansion revenue matters (need customer volume for cross-sells)

- Product-market fit still being refined (monthly gives faster feedback)

- Network effects important (more users = more value)

3. Behavioral Insights

The variant successfully primed visitors to think about annual savings BEFORE evaluating plans. This anchoring effect worked—43.5% chose annual vs. 29.6% in control.

However, leading with price savings may have introduced price sensitivity for some visitors. The -6% signup decrease (though not significant) suggests some visitors became more price-conscious when savings messaging was prominent.

Hypothesis Validation:

Partially validated. Annual selection increased as predicted, but total signup rate didn't hold constant as assumed.

4. Segment Considerations

Given the desktop-heavy audience (82%), this is typical B2B purchasing behavior—researching on desktop, often with longer consideration periods.

Recommendation: Analyze mobile separately. The 18% mobile traffic may show different patterns (mobile users less likely to commit to annual plans due to smaller screen limiting full feature comparison).

B2B SaaS Pricing Page Benchmarks

Industry data across device types and traffic sources:

Insight: This client's desktop performance (5.2% control signup rate, 31% annual mix) is at the high end of benchmarks. Mobile performance (3.1% signup, 22% annual) is also strong. This suggests the pricing page itself is well-optimized—improvements will be incremental rather than transformational.

5. Next Steps Recommendations Decision: Don't implement Variant, but don't discard either

The variant isn't a clear winner by revenue, but it demonstrates ability to shift plan selection. This suggests opportunity for more refined testing.

Follow-Up Test Ideas: Test 1: Hybrid Approach (Priority: High)

- Hypothesis: Combine Control's higher signup rate with Variant's annual shift

- Test: Keep toggle below plans (Control position), but add "Most customers save with annual plans" message above plans

- Expected outcome: Maintain 4.8% signup rate while increasing annual selection to 35-40%

- Rationale: Subtle nudge toward annual without leading with price

Test 2: Conditional Toggle Display (Priority: Medium)

- Hypothesis: Show annual toggle based on traffic source quality

- Test: High-intent traffic (branded search, direct) sees toggle above (pushes to annual). Low-intent traffic (broad keywords) sees toggle below (reduces friction)

- Expected outcome: Optimize for revenue per segment

- Rationale: Different visitors have different price sensitivity

Test 3: Savings Messaging Variation (Priority: Medium)

- Keep Variant's toggle position but test messaging

- Control: "Save 20% with annual billing"

- Variant A: "Pay for 10 months, get 12" (different frame)

- Variant B: "Most popular: Annual plans" (social proof angle)

- Hypothesis: The messaging matters more than position

- Expected outcome: Find message that shifts to annual WITHOUT reducing signups

Additional Data to Collect:

- Trial-to-paid conversion rate by plan type (are annual trials converting better?)

- Segment analysis by company size (small businesses vs. enterprise)

- Time to decision (did annual toggle above/below change consideration time?)

Conclusion:

This test revealed a tension between signup volume and plan mix optimization. The next test should find the balance point. I recommend Test 1 (hybrid approach) as the highest-probability winner.

---

This analysis demonstrates AI's ability to handle nuanced trade-offs and provide sophisticated revenue-focused recommendations.

Specialized Analysis Prompts

Multi-Variate Test Analysis

Analyze this multi-variate test with 4 variants.

**Test Details:**

[Include standard test details]

**Results for All Variants:**

| Variant | Description | Visitors | Conversions | Conv. Rate | Revenue |

|---------|-------------|----------|-------------|------------|---------|

| Control | [baseline] | [X] | [X] | [X%] | $[X] |

| Variant A | [change 1] | [X] | [X] | [X%] | $[X] |

| Variant B | [change 2] | [X] | [X] | [X%] | $[X] |

| Variant C | [change 3] | [X] | [X] | [X%] | $[X] |

**Provide:**

1\. Rank variants by performance with statistical significance

2\. Identify which specific change drove the biggest impact

3\. Recommend whether to:

\- Implement the single best variant - Combine elements from multiple variants - Run follow-up test with hybrid approach

4\. Calculate expected revenue impact of implementing winner

Multi-Variate Testing: When and How to Use

Rule of Thumb: Each additional variant increases time to significance by ~50% and requires proportionally more traffic. Only use multi-variate when you have sufficient traffic and genuinely independent hypotheses to test.

Real Example of Multi-Variate Gone Wrong:

Client wanted to test 5 headline variations + 3 CTA button colors + 2 hero image options = 30 possible combinations. At their traffic volume (1,200 visitors/day), reaching significance would take 18 months. We restructured into:

- Sequential A/B test for headline (2 weeks)

- Implement winner, test CTA colors (2 weeks)

- Implement winner, test hero images (2 weeks)

Total time: 6 weeks vs. 18 months. Results: +37% lift in 6 weeks.

Segment-Specific Performance Analysis

Analyze segment-level performance differences in this A/B test.

**Overall Results:**

[Include standard results]

**Performance by Device:**

| Variant | Mobile Conv. Rate | Desktop Conv. Rate |

|---------|-------------------|-------------------|

| Control | [X%] | [X%] |

| Variant | [X%] | [X%] |

**Performance by Traffic Source:**

| Variant | Organic | Paid | Direct |

|---------|---------|------|--------|

| Control | [X%] | [X%] | [X%] |

| Variant | [X%] | [X%] | [X%] |

**Analyze:**

1\. Which segments responded most positively to the variant?

2\. Which segments showed no difference or negative response?

3\. Should we implement variant site-wide or only for specific segments?

4\. What do segment differences reveal about user behavior?

5\. Recommend targeted follow-up tests for underperforming segments

Segment Performance Benchmarks: What's Normal vs. Concerning

Real Segment Analysis Example:

Client tested new checkout flow. Overall results showed +8% conversion lift. But segment analysis revealed:

Decision: The overall win was driven entirely by desktop, while mobile performance tanked. Investigation revealed the new checkout flow had a form field too small for mobile keyboards. We implemented the variant for desktop only and launched a separate mobile-optimized checkout test.

Lesson: Segment analysis can reveal that an "overall winner" is actually a loser for important segments. Always check device, traffic source, and new vs. return visitor segments at minimum.

Sequential Test Analysis (Building on Previous Winner)

Analyze this test which built on a previous winning variant.

**Previous Test (Baseline for This Test):**

* Test: [description of previous test]

* Winner: [which variant won]

* Lift: [X%]

* Implemented: [date]

**Current Test:**

* Hypothesis: [building on previous winner, what are we testing now?]

* Duration: [X days]

**Results:**

| Variant | Conversions | Conv. Rate | Lift vs. Original Baseline |

|---------|-------------|------------|---------------------------|

| Previous Winner | [X] | [X%] | [X%] |

| New Variant | [X] | [X%] | [X%] |

**Analyze:**

1\. Did the new variant beat the previous winner?

2\. What's the cumulative lift from original baseline? (Compound both tests)

3\. Are we seeing diminishing returns or continued optimization?

4\. Recommend whether to continue iterating on this element or test something else

5\. Suggest next test in the sequence (if continuing) or pivot direction (if plateauing)

Sequential Testing: The Compounding Returns Framework

*Cumulative impact compounds: (1 + 0.30) × (1 + 0.15) = 1.495 = 49.5% total lift, not 45%

**Example calculations shown with conservative mid-range estimates

When to Pivot:

- Three consecutive tests with <5% lift each

- Next test in sequence would take >4 weeks to reach significance

- Other page elements show higher potential impact in qualitative research

Real Sequential Testing Example:

E-commerce client optimizing product page over 6 months:

Total Impact: Nearly doubled conversion rate (2.8% → 6.33%) through sequential testing. Each test built on previous winners.

Key Insight: The compound lift (126%) is dramatically larger than the sum of individual lifts (91%). This is the power of sequential optimization—each improvement raises the baseline for the next test.

Failed Test Analysis (Learning from Losses)

Analyze this test where the variant performed worse than control.

**Test Details:**

* Hypothesis: [what we expected to improve and why]

* Expected impact: [X%]

**Results:**

| Variant | Conv. Rate | Change |

|---------|------------|--------|

| Control | [X%] | baseline |

| Variant | [X%] | [X%] (negative) |

**Secondary Metrics:**

[Include bounce rate, time on page, etc. that might explain the failure]

**Analyze:**

1\. Why did the variant fail? (Hypothesize based on data)

2\. Was the hypothesis wrong, or execution flawed?

3\. What did we learn that's valuable for future tests?

4\. Should we:

\- Test the inverse approach? - Abandon this direction entirely? - Refine and test again with modifications?

5\. Suggest 2-3 alternative hypotheses that address the same problem differently

Failed Test Learning Framework

Failed tests are often more valuable than winners—if you extract the right lessons. Use this framework:

Real Failed Test Examples and Learnings:

Key Insight: Failed tests that teach you what NOT to do are as valuable as winners that teach you what TO do. Always document failed tests with the same rigor as successful ones.

This prompt helps extract learning from failures—often more valuable than wins.

Advanced Analysis Techniques

Time-Based Pattern Analysis

Some tests show day-of-week or time-of-day effects:

Analyze this test for time-based patterns.

**Test ran for 3 full weeks (21 days).** **Performance by Week:**

| Variant | Week 1 Conv. Rate | Week 2 Conv. Rate | Week 3 Conv. Rate |

|---------|------------------|------------------|------------------|

| Control | [X%] | [X%] | [X%] |

| Variant | [X%] | [X%] | [X%] |

**Performance by Day of Week (Aggregated):**

| Variant | Mon | Tue | Wed | Thu | Fri | Sat | Sun |

|---------|-----|-----|-----|-----|-----|-----|-----|

| Control | [X%] | [X%] | [X%] | [X%] | [X%] | [X%] | [X%] |

| Variant | [X%] | [X%] | [X%] | [X%] | [X%] | [X%] | [X%] |

**Analyze:**

1\. Is performance consistent across weeks or improving/declining?

2\. Are there day-of-week patterns? (e.g., variant performs better on weekends)

3\. Could novelty effect or visitor fatigue be factors?

4\. Should we:

\- Extend test duration to confirm patterns? - Implement variant only on specific days? - Consider rotating experiences?

5\. What business insights do temporal patterns reveal?

Temporal Pattern Benchmarks: What's Normal

Real Temporal Analysis Example:

Restaurant reservation platform tested new booking flow over 4 weeks:

Pattern: Variant performance degraded over time. Investigation revealed a novelty effect—the new flow was visually distinct and caught attention initially, but users became habituated to it. By week 4, performance converged toward control.

Decision: Don't implement variant. The initial lift was temporary, not sustainable.

Day-of-Week Analysis:

Pattern: Variant performs best on high-traffic days (Friday-Sunday). Users booking weekend reservations respond better to streamlined flow, while weekday users (often browsing for future dates) are less time-sensitive.

Decision: Implement variant on Friday-Sunday only, keep control Monday-Thursday. This segmented approach captures 80% of the lift with 100% confidence (no risk of degradation for weekday traffic).

Cohort-Based Analysis

For tests affecting return visitors:

Analyze this test comparing new vs. returning visitor performance.

**New Visitors:**

| Variant | Visitors | Conv. Rate |

|---------|----------|------------|

| Control | [X] | [X%] |

| Variant | [X] | [X%] |

**Returning Visitors:**

| Variant | Visitors | Conv. Rate |

|---------|----------|------------|

| Control | [X] | [X%] |

| Variant | [X] | [X%] |

**Analyze:**

1\. Which cohort responded better to the variant?

2\. If new visitors and returning visitors show opposite results, what does that mean?

3\. Should we implement different experiences for each cohort?

4\. What does this reveal about customer journey and decision-making?

5\. Recommend cohort-specific follow-up tests

New vs. Return Visitor Benchmarks

When New and Return Visitors Show Opposite Results:

This usually indicates one of three scenarios:

Real Cohort Analysis Example:

SaaS company tested new onboarding flow:

Investigation: The new onboarding flow included detailed tutorial steps that helped first-time users but frustrated return visitors who already knew the product. Return visitors abandoned when forced through unnecessary tutorials.

Solution: Implement variant for new visitors only. Add "Skip tutorial" option for return visitors. Result: +53% lift for new visitors maintained, return visitor conversion recovered to baseline, overall lift increased to +31%.

Building Your Analysis Library

Document every test analysis to build organizational knowledge:

Template:

## Test: [Name] - [Date]

### Hypothesis

[What we tested and expected outcome]

### Results Summary

* Winner: [Control / Variant X]

* Lift: [X%]

* Statistical Significance: [Yes/No, X% confidence]

* Business Impact: $[X] [monthly/annual]

### Key Insights

1\. [Primary learning]

2\. [Secondary learning]

3\. [Unexpected finding]

### What Worked

* [Specific element that drove success]

* [Psychological principle validated]

### What Didn't Work

* [Elements that had no impact or negative impact]

### Follow-Up Tests Launched

1\. [Test name and date launched]

2\. [Test name and date launched]

### AI Analysis Prompt Used

[Link to or copy of exact prompt]

### Full AI Response

[Complete AI analysis for reference]

Over time, this library becomes your competitive advantage—you know what works in your context.

Building a Test Library That Actually Gets Used

Most companies create test documentation that nobody reads. Here's how to build a library that becomes your competitive advantage:

Essential Fields for Every Test:

Tagging System for Pattern Recognition:

How to Surface Patterns:

After 20+ tests, start running queries like:

- "Show me all #social-proof tests on #product-page" → Do testimonials consistently win here?

- "Show me all #mobile #headline tests" → What headline patterns work on mobile?

- "Show me all #winner tests with #urgency tactics" → Does urgency consistently drive lifts?

Real Pattern Discovery Example:

After documenting 47 tests over 6 months, an e-commerce client discovered:

- Pattern 1: All 8 tests using #specificity in copy won (e.g., "2-day shipping" vs. "fast shipping")

- Pattern 2: All 6 tests adding #social-proof won on #product-page but only 2/5 won on #homepage

- Pattern 3: All 4 tests using #loss-aversion framing (#scarcity, limited-time) won on #paid traffic but only 1/4 won on #organic

Actionable Playbook Created:

- Always use specific language (proven winner)

- Use social proof on product pages, not homepage

- Use urgency/scarcity messaging for paid traffic, not organic

Result: New tests informed by these patterns had a 78% win rate vs. 41% before pattern recognition.

Common Analysis Mistakes

Mistake 1: Calling Winners Too Early

Symptom: Test reaches 85% confidence, you declare winner Why It's Wrong: 85% confidence = 15% chance results are random noise Fix: Always wait for 95% confidence minimum. Use this prompt:

My test shows 87% statistical confidence after 1 week.

Traffic: [X]/day

Conversions: [X] control, [X] variant

Current lift: [X%]

Should I:

A) Call it a winner now

B) Wait for 95% confidence

C) Extend test duration

How many more days/conversions do I need to reach 95% confidence?

Mistake 2: Ignoring Secondary Metrics

Symptom: Variant has higher conversion but worse engagement Why It's Wrong: May attract lower-quality conversions or harm long-term metrics Fix: Always include secondary metrics in analysis prompt:

Variant shows +20% conversion increase, but:

* Bounce rate increased from 45% to 58%

* Time on page decreased from 120 sec to 75 sec

* Return visitor rate decreased from 22% to 14%

Is this a real win or Pyrrhic victory? Analyze trade-offs and recommend decision.

Mistake 3: No Follow-Up Test Plan

Symptom: Test ends, you implement winner, then... nothing Why It's Wrong: Optimization is iterative. Every test should lead to next test. Fix: Always ask AI for follow-up recommendations:

[Include test results]

Based on these results, suggest 3 follow-up tests that:

1\. Build on what worked

2\. Address what didn't work

3\. Explore adjacent opportunities

Rank by expected impact and ease of implementation.

The 7 Deadly Sins of Test Analysis

Real Cost Example:

A B2B client committed all 7 sins on a single pricing page test:

- Peeked after 3 days, saw variant "winning" at 82% confidence

- Focused on organic traffic segment (+31%) while ignoring paid traffic (-18%)

- When conversion rate wasn't significant, switched to analyzing email signup rate (which was)

- Never checked mobile (where variant was -24%)

- Calculated they needed 5,000 visitors but only ran test with 1,800

- Didn't document why they thought it would work

- Tested 6 variants simultaneously, each getting ~300 visitors

Result: Implemented a "winner" that actually decreased overall conversions by 9% and mobile conversions by 24%. Took 6 weeks to realize the mistake. Estimated cost: $87,000 in lost revenue.

The Fix: Instituted a pre-test checklist and post-test review process using AI prompts. Win rate improved from 34% to 68% within 3 months.

Real Client Example: Complete Analysis Workflow

Client: B2B SaaS, project management tool Test: Pricing page CTA copy variation Test Details:

- Duration: 18 days

- Traffic: 6,400 visitors

- Variants: 3 (control + 2 test variants)

Control: "Start Free Trial" Variant A: "Start 14-Day Free Trial" Variant B: "Try Free for 14 Days - No Credit Card" Raw Results:

- Control: 2,133 visitors, 89 trials (4.17%)

- Variant A: 2,134 visitors, 96 trials (4.50%)

- Variant B: 2,133 visitors, 114 trials (5.34%)

Analysis Time with AI: 6 minutes Analysis Time without AI: 2+ hours AI Key Insights:

1. Variant B is clear winner (+28% lift, 99% confidence)

2. Specificity matters: "14 days" outperformed generic "free trial"

3. Risk reversal works: "No credit card" addressed key objection

4. Variant A's modest lift (+8%) suggests length alone isn't enough—need to address risk

Follow-Up Tests Suggested by AI:

1. Test "No Credit Card" on hero CTA (currently only on pricing page)

2. Add "No credit card required" below all CTA buttons throughout site

3. Test extended trial: "Try Free for 21 Days" (pushing further)

Implementation:

- Rolled out Variant B site-wide (day 19)

- Launched hero CTA test with "no credit card" messaging (day 22)

- Expected annual impact: +$285K ARR from compound effects

ROI on AI Analysis: Saved 2 hours per test × 15 tests/month = 30 hours/month saved

90-Day Case Study: Scaling from 3 to 18 Tests/Month

Client Background: Mid-market SaaS ($4.2M ARR), 85K monthly website visitors, 1-person growth team

Starting State (Month 0):

- Tests per month: 3

- Analysis time per test: 2.5 hours

- Total analysis time: 7.5 hours/month

- Win rate: 38%

- Average lift per winner: 12%

After AI Analysis Implementation:

Key Learnings:

- Time Saved: Analysis time reduced by 85% (2.5 hours → 18 minutes)

- Capacity Unlocked: Same person running 6x more tests

- Quality Improved: Win rate increased 53% (38% → 58%) due to better pattern recognition

- Compounding Returns: Each test informed next tests, creating accelerating lift

90-Day Outcome:

- Monthly recurring revenue increase: $47,600 (+1.13% of ARR per month)

- Projected annual impact: $571,200

- Cost of AI tools: $0 (using Claude)

- Net ROI: Infinite (time investment recouped through efficiency)

Scaling Analysis for 10+ Monthly Tests

When running 10-20 tests monthly, standardize your analysis workflow:

Weekly Analysis Sprint (90 minutes total): Monday (30 min):

- Export data from all active tests

- Run AI analysis prompts for completed tests

- Flag tests reaching significance for implementation

Wednesday (30 min):

- Review AI recommendations

- Prioritize follow-up tests using ICE framework

- Generate variant copy for next week's launches

Friday (30 min):

- Document learnings in test library

- Share top insights with team

- Launch queued tests

This routine lets one person manage 15+ concurrent experiments rigorously.

ICE Framework for Prioritizing Follow-Up Tests

When AI suggests 3-5 follow-up tests, use ICE scoring to prioritize:

*ICE Score = (Impact × Confidence × Ease) / 100

Scoring Guidelines:

Impact (1-10): Expected business impact

- 10: Could 2x conversion rate

- 7-9: Could achieve 30-100% lift

- 4-6: Could achieve 10-30% lift

- 1-3: Could achieve <10% lift

Confidence (1-10): How sure are you it will work?

- 10: Proven winner on similar pages, backed by research

- 7-9: Strong hypothesis, some supporting data

- 4-6: Reasonable hypothesis, no direct evidence

- 1-3: Speculative, weak hypothesis

Ease (1-10): Implementation effort

- 10: Copy change only, <30 min implementation

- 7-9: Design change, <1 day implementation

- 4-6: Frontend dev required, 1-3 days

- 1-3: Backend changes, cross-team dependencies, >1 week

Real Prioritization Example:

After winning trust badge test, AI suggested 5 follow-ups:

Outcome: Launched top 3 tests in parallel (different pages, no traffic conflict). All three won:

- Cart page badges: +23% cart-to-checkout conversion

- Badge design: +11% additional lift on product pages

- Checkout badges: +18% checkout completion

Compound Impact: (1 + 0.45) × (1 + 0.23) × (1 + 0.11) × (1 + 0.18) = 2.27× improvement = 127% cumulative lift from original baseline.

Next Steps: Close the Experimentation Loop

You've learned to analyze test results in minutes with AI. The rapid experimentation loop is complete:

1. Generate hypotheses (see: Generating Test Hypotheses with AI)

2. Create variants (see: Rapid Landing Page Variants)

3. Set up tests (see: Setting Up Your First AI-Powered A/B Test)

4. Analyze results (this guide)

5. Repeat with follow-up tests

Your Action Plan: This Week:

- Take your most recent test results

- Run the Master Analysis Prompt

- Implement winning variant

- Launch recommended follow-up test

Next 30 Days:

- Analyze every test within 24 hours of reaching significance

- Document all learnings in test library

- Build to 8-10 concurrent tests with rigorous analysis

Next 90 Days:

- Review test library for patterns

- Create "greatest hits" playbook of proven winners

- Train team on AI-powered analysis workflow

- Scale to 15-20 monthly tests

The teams winning at optimization aren't smarter—they're faster. AI-powered analysis removes the bottleneck between test completion and next test launch.

Start analyzing your most recent test today. You'll be running a full velocity experimentation program within a month.

The Experimentation Maturity Model

Where are you on the experimentation maturity curve?

Time to Level Up:

- Level 0 → Level 1: 1 month (launch first test)

- Level 1 → Level 2: 2-3 months (establish cadence with AI)

- Level 2 → Level 3: 3-4 months (build library, recognize patterns)

- Level 3 → Level 4: 4-6 months (scale operations, cross-team buy-in)

- Level 4 → Level 5: 6-12 months (cultural transformation)

Most companies never get past Level 1-2. AI-powered analysis is the unlock that enables progression to Level 3-4.

Real Progression Example:

Total Annual Impact: $999K from experimentation program enabled by AI analysis.

--- About WE•DO Worldwide

We're a bolt-on marketing team executing 15-20 A/B tests per month using AI-accelerated workflows. Our clients see 25-40% conversion improvements within 90 days through rapid experimentation and rigorous analysis. Learn more about our growth marketing services.

Ready to Transform Your Growth Strategy?

Let's discuss how AI-powered marketing can accelerate your results.