The average email marketer tests two subject lines per campaign: Option A and Option B. One copywriter drafts both. The team picks their favorite. The campaign launches.

This approach leaves performance on the table. What if the best subject line was Option F—the one nobody thought to test because brainstorming stopped at two?

GPT-4 changes the economics of subject line testing. Instead of two variants, generate 50. Instead of gut-feel decisions, systematically test patterns. Instead of incremental improvements, discover combinations that can raise open rates by 50% or more.

This isn't about replacing human judgment. It's about expanding the possibility space so your judgment operates on better options.

Why Subject Lines Deserve Obsessive Testing

Subject lines control email program success more than any other variable.

The Conversion Funnel:

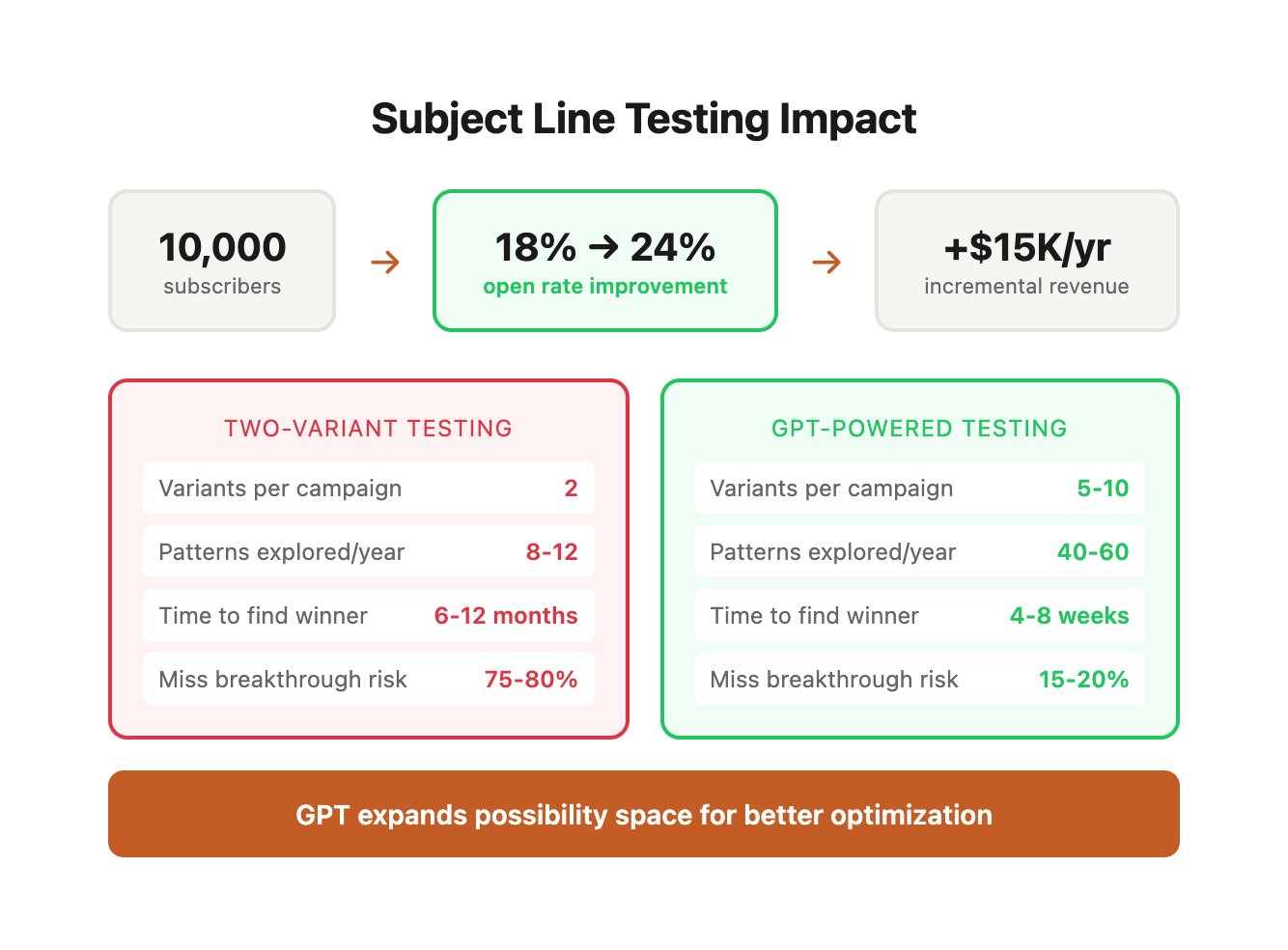

10,000 subscribers

↓ 18% open rate (1,800 opens) ← Subject line controls this

↓ 12% click rate (216 clicks)

↓ 4% conversion rate (8 conversions)

If you improve open rate from 18% to 24% (a 33% relative increase), you get:

10,000 subscribers

↓ 24% open rate (2,400 opens) ← +600 opens

↓ 12% click rate (288 clicks) ← +72 clicks

↓ 4% conversion rate (11 conversions) ← +3 conversions

For an e-commerce brand with $100 average order value, that's $300 additional revenue per campaign—with zero increase in list size or acquisition cost.

Multiply across 50 campaigns per year: $15,000 in incremental revenue from subject line optimization alone.
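The funnel arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch: the function name `funnel` and its parameters are illustrative, and it treats click rate as clicks per open and conversion rate as conversions per click, which is how the figures above are computed.

```python
def funnel(subscribers, open_rate, click_rate, conversion_rate):
    """Return (opens, clicks, conversions) for one campaign.

    click_rate is clicks per open; conversion_rate is conversions per click.
    """
    opens = subscribers * open_rate
    clicks = opens * click_rate
    conversions = clicks * conversion_rate
    return opens, clicks, conversions


baseline = funnel(10_000, 0.18, 0.12, 0.04)
improved = funnel(10_000, 0.24, 0.12, 0.04)

# The figures above round conversions down to whole orders (8 and 11).
extra_orders = int(improved[2]) - int(baseline[2])
extra_revenue = extra_orders * 100  # $100 average order value

print(f"Baseline: {baseline[0]:.0f} opens, {baseline[1]:.0f} clicks, {int(baseline[2])} orders")
print(f"Improved: {improved[0]:.0f} opens, {improved[1]:.0f} clicks, {int(improved[2])} orders")
print(f"Extra revenue per campaign: ${extra_revenue}; over 50 campaigns: ${extra_revenue * 50}")
```

Swapping in your own list size, rates, and order value shows how sensitive revenue is to the open-rate step alone.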

Most email programs underoptimize here because:

- Creative bandwidth is limited, so marketers prioritize the email body over subject lines

- Traditional testing (2-3 variants per campaign) yields slow learning

- Results don't transfer well between campaigns without pattern analysis

- High-performing formulas are discovered accidentally, not systematically

AI-powered testing solves all four problems.

The Hidden Cost of Traditional Testing

Let's examine what happens when you test only two subject lines per campaign across a year:

Traditional Approach Performance Profile:

The problem isn't just that you test fewer variants—it's that your learning compounds slowly. With traditional testing, you might discover that "urgency + numbers" outperforms generic statements after six months of trial and error. With systematic GPT testing, you identify this pattern in week three and spend the next five months refining it.

Real-World Learning Velocity Comparison:

After 90 days of email campaigns (assuming 2 sends per week, 26 total campaigns):

AI-powered testing doesn't just give you more data points—it accelerates the pattern recognition feedback loop that drives compounding improvements.

The Psychology of Subject Line Performance

Understanding why certain subject lines outperform others requires examining the cognitive processing that happens in the 0.4-0.7 seconds a recipient takes to decide whether to open an email.

The Inbox Scanning Process:

- Visual Pattern Recognition (50-100ms): Brain identifies sender name, subject line length, and visual elements (emojis, brackets, numbers)

- Semantic Processing (200-300ms): Brain extracts meaning from first 30-40 characters

- Relevance Assessment (100-200ms): Subconscious evaluation: "Does this matter to me right now?"

- Action Decision (50-100ms): Open, skip, or delete

Total decision time: 400-700 milliseconds

This means your subject line must accomplish three objectives in less than one second:

- Pass visual filters (stand out from surrounding emails)

- Communicate immediate value (answer "why should I care?")

- Trigger emotional response (curiosity, urgency, desire, fear-of-missing-out)

Cognitive Triggers That Drive Opens:

Understanding these triggers allows you to engineer subject lines that exploit multiple psychological principles simultaneously.

Multi-Trigger Combination Performance:

The highest-performing subject lines typically activate 2-3 psychological triggers simultaneously. Single-trigger subject lines ("20% off sale") perform 15-30% worse than multi-trigger variants ("20% off ends midnight—your cart is waiting").

AI-powered testing allows you to systematically explore trigger combinations that human brainstorming might never surface.

The GPT-Powered Testing Framework

This five-step process takes you from zero to systematically optimized subject lines in 30 days.

Step 1: Audit Historical Performance (2-3 hours)

Before generating new variants, understand what's already worked.

Export your last 100 campaigns from your email platform (Klaviyo, Mailchimp, HubSpot, etc.) with these fields:

- Campaign name

- Subject line

- Send date

- List size

- Open rate

- Click rate

Pattern Analysis Framework:

Sort by open rate (highest to lowest). Study your top 20 performers. Look for patterns:

Pattern Performance Summary Table:

Document your findings like this:

Key Insights from Analysis:

Mobile vs. Desktop Performance Variance:

The device your subscribers use significantly impacts which subject line patterns work best. Analyze your list's mobile percentage (most ESPs show this metric) and adjust accordingly:

Example Mobile Optimization:

Desktop-optimized: "Exclusive members-only early access to our new spring collection starts tomorrow"

Mobile-optimized: "🌸 Early access: New spring collection tomorrow"

The mobile version communicates the same core value in 46 characters instead of 80, so far more of the message survives truncation on smartphone screens.

This audit becomes your baseline. Your goal: beat these patterns through systematic testing.
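As a rough illustration of the audit, the sketch below ranks exported campaigns by open rate and tags each subject line with a few simple pattern labels. The column names (`subject`, `open_rate`), the sample rows, and the tagging rules in `tag_patterns` are all assumptions made for the example; a real ESP export will use its own headers, and your pattern list will come from your own data.

```python
import csv
import io
import re

# Hypothetical export; real ESP exports use their own column names.
EXPORT = """subject,open_rate
"Only 6 hours left: Flash sale ends at midnight",0.294
"New arrivals are here",0.171
"Is your gym bag ready for spring?",0.223
"""


def tag_patterns(subject):
    """Return the set of simple pattern labels found in a subject line."""
    tags = set()
    if "?" in subject:
        tags.add("question")
    if re.search(r"\d", subject):
        tags.add("number")
    if re.search(r"\b(hours?|today|tonight|midnight|ends)\b", subject, re.IGNORECASE):
        tags.add("urgency")
    if len(subject) <= 30:
        tags.add("short")
    return tags


rows = list(csv.DictReader(io.StringIO(EXPORT)))
rows.sort(key=lambda r: float(r["open_rate"]), reverse=True)

top = rows[:20]  # top 20 performers (fewer here because the sample is tiny)
for row in top:
    print(f'{float(row["open_rate"]):.1%}  {sorted(tag_patterns(row["subject"]))}  {row["subject"]}')
```

Counting how often each tag appears among the top performers versus the rest of the export gives a first, informal read on which patterns deserve a test slot.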

Step 2: Define Testing Framework (1 hour)

Effective testing requires structure. Don't generate random variants—test specific hypotheses.

Framework Components:

A. Pattern Categories to Test

Choose 4-6 categories from your audit that showed promise:

- Questions vs. statements

- Urgency-driven vs. curiosity-driven

- Short vs. long

- Numbers vs. no numbers

- Emoji inclusion vs. text-only

- Personalization vs. generic

B. Testing Schedule

With a 50,000-person list sending 2 campaigns per week, plan:

- Weeks 1-2: Test pattern categories (4 campaigns, with each pattern appearing in at least two)

- Weeks 3-4: Test winning patterns with variations (4 campaigns, refine winners)

- Week 5+: Implement best performers, test refinements

C. Sample Size and Statistical Significance

Calculate minimum detectable effect based on your list size.

For a 50,000-person list split into 5 test groups (10,000 per variant):

- Baseline open rate: 20%

- Minimum detectable lift: 2 percentage points (10% relative improvement)

- Confidence level: 95%

- Statistical power: 80%

Use an A/B test calculator (Evan Miller's tool or Optimizely's calculator) to verify your sample sizes are sufficient.
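If you want to sanity-check a calculator's answer, the standard normal-approximation formula for comparing two proportions is easy to compute yourself. The sketch below is one common version of that formula, not the exact method any particular calculator uses; the function name `sample_size_per_variant` is illustrative, and it ignores the extra caution needed when comparing many variants at once.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, lift, alpha=0.05, power=0.80):
    """Approximate subscribers needed per variant to detect an absolute lift.

    Uses the normal-approximation formula for a two-sided comparison
    of two proportions.
    """
    p1, p2 = baseline, baseline + lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / lift ** 2)


needed = sample_size_per_variant(baseline=0.20, lift=0.02)
print(f"Need about {needed:,} subscribers per variant")
print("10,000 per variant is enough" if needed <= 10_000 else "10,000 per variant is too few")
```

For the parameters listed above, the requirement comes out at roughly 6,500 per variant, so 10,000 per variant leaves some headroom.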

Statistical Significance Lookup Table:

Use this table to determine how many variants you can reliably test given your list size:

Key:

- ✓ = Statistically valid with 95% confidence

- ⚠ = Valid but requires larger effect to detect

- ✗ = Not recommended (insufficient sample size)

Smaller lists: If you have fewer than 10,000 subscribers, test fewer variants per campaign (3 instead of 5) to maintain statistical power.

Testing Frequency and Learning Rate:

Higher send frequency accelerates learning. If you only send weekly, expect 8-10 weeks to identify high-confidence patterns. Daily senders can achieve the same confidence in 2-3 weeks.

D. Segmentation Strategy

Not all subscribers respond identically to subject line patterns. Define segments before testing to uncover segment-specific preferences:

Example Multi-Segment Test Design:

Campaign: New product launch

Testing the same campaign across segments reveals whether patterns are universal or segment-specific—critical knowledge for scaling performance improvements.

Step 3: Generate Variants with GPT-4 (30 minutes per campaign)

Now the acceleration begins. Instead of brainstorming 2-3 subject lines, generate 50 in 30 minutes.

Structured Prompt Template:

You are an email marketing copywriter specializing in subject line optimization for [industry/niche]. Our brand voice is [voice description]. Our audience is [demographic and psychographic details].

Campaign goal: [e.g., promote 20% off sale, announce new product, nurture lead segment]

Email body preview: [1-2 sentence summary]

Target audience: [list segment details]

Generate 10 email subject lines with these parameters:

Pattern: [Questions/Commands/Statements/Curiosity]

Tone: [Direct/Conversational/Urgent/Playful]

Length: [Short: under 30 chars / Medium: 30-50 chars / Long: 50+ chars]

Include: [Numbers/Brackets/Emojis/Personalization - specify which]

Subject lines should:

1. Create immediate interest or urgency

2. Align with brand voice

3. Avoid spam trigger words (free, buy now, limited time - unless strategically necessary)

4. Work synergistically with preview text: "[Your preview text here]"

Output format:

1. [Subject line]

2. [Subject line]

[continue through 10]

Example: E-commerce Flash Sale Campaign

Prompt:

You are an email marketing copywriter for "Peak Fitness," an activewear e-commerce brand. Our voice is motivational and energetic. Our audience is 25-45-year-old fitness enthusiasts who value quality and performance.

Campaign goal: Promote 24-hour flash sale (30% off all apparel)

Email body preview: New season styles at 30% off—marathon training, gym sessions, or weekend hikes

Target audience: Engaged subscribers (opened 2+ emails in last 30 days)

Generate 10 subject lines:

Pattern: Urgency-driven

Tone: Direct and motivational

Length: Medium (30-50 characters)

Include: Specific percentage discount, time frame

Output format:

1. [Subject line]

GPT-4 Output:

- 30% Off Ends Tonight—Gear Up Now

- [Name], 24 Hours Only: 30% Off Activewear

- Flash Sale: Save 30% Before Midnight

- Your 24-Hour Window: 30% Off Everything

- Don't Miss This: 30% Off Ends Tomorrow

- Last Call—30% Off All Activewear Tonight

- 24 Hours Left: Take 30% Off Your Cart

- Midnight Deadline: 30% Off Peak Apparel

- Final Hours for 30% Off—Stock Up Now

- Time's Running Out: 30% Off Flash Sale

Run this prompt 5 times with different pattern/tone combinations:

- Round 1: Urgency-driven, direct, medium length

- Round 2: Curiosity-driven, playful, short length

- Round 3: Question-based, conversational, medium length

- Round 4: Command-based, urgent, short length

- Round 5: Value-focused, professional, long length

You now have 50 variants. Export to a spreadsheet.
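Filling in the template by hand five times is error-prone, so it can help to build the five prompts programmatically. The sketch below only assembles prompt text; it makes no API call, and the names `Round`, `TEMPLATE`, and `build_prompts` are hypothetical. The template is a shortened stand-in for the full structured prompt above.

```python
from dataclasses import dataclass


@dataclass
class Round:
    pattern: str
    tone: str
    length: str


# The five pattern/tone/length rounds listed above.
ROUNDS = [
    Round("Urgency-driven", "Direct", "Medium (30-50 characters)"),
    Round("Curiosity-driven", "Playful", "Short"),
    Round("Question-based", "Conversational", "Medium (30-50 characters)"),
    Round("Command-based", "Urgent", "Short"),
    Round("Value-focused", "Professional", "Long (50+ characters)"),
]

TEMPLATE = (
    "Campaign goal: {goal}\n"
    "Generate 10 email subject lines with these parameters:\n"
    "Pattern: {pattern}\n"
    "Tone: {tone}\n"
    "Length: {length}\n"
    "Output format: a numbered list, one subject line per line."
)


def build_prompts(goal):
    """Return one filled-in prompt per round; send each to your model of choice."""
    return [
        TEMPLATE.format(goal=goal, pattern=r.pattern, tone=r.tone, length=r.length)
        for r in ROUNDS
    ]


prompts = build_prompts("Promote 24-hour flash sale (30% off all apparel)")
print(len(prompts), "prompts, up to", len(prompts) * 10, "variants")
print(prompts[0])
```

Keeping the rounds in a list also gives you a record of which pattern each generated variant belongs to, which matters later when you aggregate results by pattern.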

Advanced Prompt Engineering Techniques:

The quality of your GPT-generated subject lines depends heavily on prompt structure. Here are proven enhancements:

1. Competitive Context Injection:

Before generating subject lines, here are examples from our top 3 competitors:

Competitor A: "Summer Sale: Up to 50% Off"

Competitor B: "New Arrivals Just Dropped 🔥"

Competitor C: "Your Exclusive Member Discount Inside"

Generate subject lines that differentiate from these approaches while remaining on-brand.

This ensures your variants don't blend into the competitive landscape.

2. Historical Winner Integration:

Our highest-performing subject lines from the last 90 days:

- "You left something behind (+ 20% off to complete your order)" - 34.2% open rate

- "[Name], your personalized recommendations are ready" - 31.8% open rate

- "Only 6 hours left: Flash sale ends at midnight" - 29.4% open rate

Analyze what makes these successful. Generate 10 new variants that incorporate these winning elements while introducing fresh variations.

This creates an iterative improvement loop—each generation builds on proven patterns.

3. Anti-Pattern Specification:

Avoid these patterns that historically underperform for our audience:

- Generic discount announcements without urgency

- Vague curiosity without clear value preview

- Subject lines over 60 characters

- Multiple exclamation marks or all-caps words

Generate variants that maintain energy and interest while avoiding these pitfalls.

Explicitly stating what NOT to do prevents GPT from generating variants you'll immediately reject.

4. Preview Text Co-Generation:

For each subject line, also generate a complementary preview text (60-90 characters) that:

- Extends the subject line message

- Provides additional context or urgency

- Creates a complete thought when combined with subject line

- Includes a soft CTA

Output format:

Subject: [Subject line]

Preview: [Preview text]

Testing subject + preview combinations as unified variants often reveals that a mediocre subject line with perfect preview text outperforms a great subject line with weak preview text.

GPT-4 vs. Claude vs. Copy.ai: Platform Comparison

Recommendation: Use GPT-4 for primary generation, then cross-validate top performers through Claude to ensure brand voice alignment.

Step 4: Curate and Prioritize (30 minutes)

50 variants is too many for one campaign. Narrow to your top 5-10 for testing.

Curation Criteria:

- Brand Alignment: Does it sound like your brand? Eliminate variants that feel off-voice.

- Clarity: Is the value proposition immediately clear? Eliminate confusing or vague options.

- Spam Filter Risk: Avoid all-caps, excessive punctuation, or known trigger phrases.

- Uniqueness: Eliminate near-duplicates (e.g., "30% Off Ends Tonight" and "30% Off Ends Today" are functionally identical).

- Hypothesis Alignment: Does this test your defined pattern categories? Eliminate outliers that don't map to your testing framework.
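Two of these criteria, spam filter risk and uniqueness, lend themselves to a quick automated first pass before the human review. The sketch below is a heuristic illustration only: the regular expressions, the 0.75 similarity threshold, and the function names `spam_flags` and `near_duplicates` are assumptions, not rules taken from any spam filter.

```python
import re
from difflib import SequenceMatcher


def spam_flags(subject):
    """Return a list of simple warning labels for a subject line."""
    flags = []
    if re.search(r"\b[A-Z]{4,}\b", subject):
        flags.append("all-caps word")
    if re.search(r"[!?]{2,}", subject):
        flags.append("repeated punctuation")
    return flags


def near_duplicates(subjects, threshold=0.75):
    """Return pairs of subject lines whose similarity ratio meets the threshold."""
    pairs = []
    for i, first in enumerate(subjects):
        for second in subjects[i + 1:]:
            ratio = SequenceMatcher(None, first.lower(), second.lower()).ratio()
            if ratio >= threshold:
                pairs.append((first, second))
    return pairs


candidates = [
    "30% Off Ends Tonight",
    "30% Off Ends Today",
    "HUGE SALE!!! Open now",
    "Still Thinking About Your Cart?",
]
for subject in candidates:
    print(subject, "->", spam_flags(subject) or "ok")
print(near_duplicates(candidates))
```

Brand alignment, clarity, and hypothesis alignment still need a human reader; the script only trims the obvious rejects.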

Prioritization Matrix:

Score each variant 1-5 on:

- Potential Impact: How different is this from your typical approach?

- Brand Fit: How well does it align with voice guidelines?

- Clarity: How obvious is the value proposition?

Multiply scores: variants with 75+ (out of 125) advance to testing.

Example Scoring:

Select your top 5 scorers for deployment.
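The multiply-and-threshold rule is simple enough to apply in a spreadsheet, but a short script makes the cutoff explicit. In the sketch below the ratings are hypothetical values invented for illustration, and `priority_score` is an illustrative name.

```python
def priority_score(impact, brand_fit, clarity):
    """Multiply the three 1-5 ratings; the maximum is 5 * 5 * 5 = 125."""
    for rating in (impact, brand_fit, clarity):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
    return impact * brand_fit * clarity


# Hypothetical ratings (impact, brand fit, clarity) for illustration only.
ratings = {
    "[Name], 24 Hours Only: 30% Off Activewear": (5, 4, 5),
    "Flash Sale: Save 30% Before Midnight": (3, 5, 5),
    "Don't Miss This: 30% Off Ends Tomorrow": (3, 4, 3),
}

scored = sorted(
    ((priority_score(*r), subject) for subject, r in ratings.items()),
    reverse=True,
)
advancing = [(score, subject) for score, subject in scored if score >= 75]
for score, subject in advancing[:5]:  # keep at most the top 5
    print(score, subject)
```

Because the scores are multiplied rather than added, a single low rating (for example, a 2 on brand fit) is enough to keep an otherwise strong variant below the 75-point cutoff.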

Spam Filter Pre-Check:

Before deploying, run your top variants through spam scoring tools:

Common Spam Triggers to Avoid:

Step 5: Deploy and Measure (Campaign day, 2-3 days for results)

Most email platforms support multivariate subject line testing natively.

Klaviyo Setup:

- Create campaign as normal

- Navigate to "A/B Test" settings

- Select "Subject Line Test"

- Add up to 10 variants

- Set test size: 50% of list (25,000 people)

- Split test group evenly: 5,000 people per variant

- Winner determination: Highest open rate after 4 hours

- Winner automatically sends to remaining 50%

Mailchimp Setup:

- Create campaign, navigate to "A/B Testing"

- Choose "Subject Line" as test variable

- Add variants (up to 3 on standard plans, 8 on premium)

- Test percentage: 50%

- Winning metric: Opens

- Wait duration: 4 hours

- Send winner to remainder

Manual Testing (for platforms without native support):

- Segment list into 5 equal groups (10,000 each for 50k list)

- Create 5 separate campaigns, each with one subject line variant

- Send simultaneously to each segment

- Wait 24-48 hours for open rate stabilization

- Manually implement winner for next campaign

Tracking and Documentation:

Create a testing log spreadsheet:

Track at campaign level and aggregate at pattern level.

After 10 campaigns, calculate average performance by pattern:

High-confidence winners become your default approach.
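A minimal sketch of the pattern-level roll-up follows. The log rows are hypothetical, and the rule that a pattern needs three or more campaign wins before it counts as high-confidence is borrowed from the Pitfall 2 guidance later in this article rather than from any statistical test.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log rows: (campaign id, pattern, open rate, won the campaign?)
log = [
    (1, "curiosity", 0.26, True),
    (1, "urgency", 0.22, False),
    (2, "curiosity", 0.25, True),
    (2, "urgency", 0.23, False),
    (3, "curiosity", 0.21, False),
    (3, "urgency", 0.24, True),
    (4, "curiosity", 0.27, True),
    (4, "urgency", 0.22, False),
]

rates = defaultdict(list)
wins = defaultdict(int)
for _campaign, pattern, open_rate, won in log:
    rates[pattern].append(open_rate)
    wins[pattern] += int(won)

for pattern in sorted(rates, key=lambda p: mean(rates[p]), reverse=True):
    # Treat a pattern as a high-confidence winner only after 3+ campaign wins.
    status = "high-confidence" if wins[pattern] >= 3 else "keep testing"
    print(f"{pattern}: avg {mean(rates[pattern]):.1%}, wins {wins[pattern]} ({status})")
```

Tracking wins alongside averages guards against one unusually strong campaign dragging a pattern's average up.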

Advanced Measurement: Time-Decay Analysis

Open rates aren't static—they evolve over time. Understanding this temporal pattern helps you determine optimal winner selection timing.

Typical Open Rate Accumulation Pattern:

Winner Selection Timing Strategy:

Selecting winners too early (1-2 hours) introduces noise. Waiting 4+ hours provides 75-85% of final open data with high statistical confidence.

Real-World Results: 3 Case Studies

Case Study 1: D2C Subscription Box (Food/Snacks)

Challenge: Open rates plateaued at 16-18% for monthly box announcement emails.

Hypothesis: Subscribers were fatigued by predictable "Your Box Ships Soon" subject lines.

Test Setup:

- Generated 60 variants across 6 pattern categories

- Tested 5 variants per campaign over 12 monthly sends

- List size: 42,000 active subscribers

Pattern Categories Tested:

- Product-focused ("5 Artisan Cheeses in This Month's Box")

- Curiosity-driven ("You're Not Ready for What's Inside")

- Member-exclusive framing ("[Name], Your Members-Only Box Awaits")

- Urgency-based ("Ships Tomorrow—Here's What's Coming")

- Social proof ("12,000 Members Loved Last Month's Selection")

- Question-based ("Guess What's in Your January Box?")

Results:

Winner: Curiosity-driven subject lines consistently outperformed. The team adopted this as the primary pattern, with question-based variants as secondary option.

Revenue Impact:

- Baseline: 17% open rate, 4.2% click rate, $84,000 monthly revenue from email

- New performance: 26% open rate, 5.1% click rate, $128,000 monthly revenue

- Incremental monthly revenue: $44,000

- Annual impact: $528,000

Time Investment: 45 minutes per campaign (variant generation and setup). Total: 9 hours over 12 months for $528K lift.

Deeper Insight—Why Curiosity Won:

The team analyzed the psychology behind the curiosity pattern's success:

- Subscription fatigue: After 6+ months, subscribers knew the format. "Your box ships tomorrow" became predictable.

- Surprise optimization: Curiosity-driven subject lines reframed the expected ("your monthly box") as unexpected ("you're not ready for this").

- Self-selection: Subscribers who opened curiosity-driven emails were more engaged, creating a virtuous cycle of higher click-through and lower churn.

Secondary Discovery—Segmentation by Tenure:

This led to a tenure-based segmentation strategy where new members received product-focused subject lines while established members got curiosity-driven variants—optimizing both groups simultaneously.

Case Study 2: B2B SaaS (Project Management Tool)

Challenge: Low open rates (12-14%) on feature announcement and educational emails.

Hypothesis: Subject lines were too product-focused. Subscribers cared more about outcomes than features.

Test Setup:

- Generated 40 variants emphasizing outcomes, not features

- Tested 5 variants per campaign over 8 sends (bi-weekly)

- List size: 18,000 qualified leads and trial users

Pattern Categories Tested:

- Feature-centric ("New Gantt Chart View Launched")

- Outcome-centric ("Ship Projects 23% Faster with This Update")

- Pain-point focused ("Tired of Messy Project Handoffs?")

- Time-saving emphasis ("Save 5 Hours Per Week on Status Updates")

- Competitive framing ("What [Competitor] Can't Do—We Just Added")

Results:

Winner: Time-saving emphasis. Quantified time savings in subject line drove highest engagement.

Conversion Impact:

- Trial-to-paid conversion rate increased from 8.2% to 11.4% (email-attributed conversions)

- 220 additional conversions over 4 months

- At $49/month average plan: $10,780 monthly recurring revenue added

Key Insight: B2B buyers care about ROI and time savings. Feature descriptions belong in the email body, not the subject line.

Detailed Performance Breakdown by Buyer Stage:

The team segmented their list by buyer journey stage and discovered pattern performance varied dramatically:

Actionable Implementation:

Based on this data, the team implemented a dynamic subject line strategy:

- Early-stage leads: Outcome-centric subject lines ("Ship projects 23% faster")

- Active/late trial users: Time-saving emphasis ("Save 5 hours per week on status updates")

- Churned trials: Aggressive time-saving + competitive framing ("We added what [Competitor] can't do—save 5 hours weekly")

This segmentation approach increased overall trial-to-paid conversion from 8.2% to 12.1%—a 48% relative improvement.

Case Study 3: E-commerce (Home Decor)

Challenge: Abandoned cart emails had a 22% open rate (decent), but the cart recovery rate was only 5.8%.

Hypothesis: Subject lines weren't addressing the core objection (price/indecision).

Test Setup:

- Generated 30 variants addressing common objections

- Tested 5 variants across 6 abandoned cart campaigns

- Audience: 12,000 cart abandoners over 30 days

Pattern Categories Tested:

- Generic reminder ("[Name], You Left Items Behind")

- Discount incentive ("Complete Your Order & Save 15%")

- Scarcity-driven ("Your Cart Items Are Selling Out Fast")

- Social proof ("2,400 Customers Love What's in Your Cart")

- Removal threat ("Your Cart Expires in 2 Hours")

- Question-based ("Still Thinking About Your Cart?")

Results:

Winner: Scarcity-driven subject lines (inventory warnings, expiration timers) drove both highest opens and recovery rates.

Revenue Impact:

- Baseline: 5.8% recovery rate on $120 average cart value

- New performance: 10.7% recovery rate

- 12,000 abandoners × 4.9% additional recovery × $120 AOV = $70,560 recovered revenue over 30 days

- Annual projection: $846,720
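The revenue figures above follow directly from the recovery rates, and the arithmetic can be checked with a short script. The variable names are illustrative; note that the annual projection simply multiplies the 30-day figure by 12, which assumes the same number of abandoners and the same lift every month.

```python
abandoners = 12_000          # cart abandoners over 30 days
baseline_recovery = 0.058    # 5.8%
new_recovery = 0.107         # 10.7%
average_cart_value = 120     # dollars

extra_recovered_carts = abandoners * (new_recovery - baseline_recovery)
monthly_revenue = extra_recovered_carts * average_cart_value
annual_projection = monthly_revenue * 12

print(f"Extra recovered carts: {extra_recovered_carts:.0f}")
print(f"Recovered revenue (30 days): ${monthly_revenue:,.0f}")
print(f"Annual projection: ${annual_projection:,.0f}")
```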

Key Insight: Abandoned cart emails benefit from urgency. Customers who abandon aren't ignoring you—they're procrastinating. Deadline-driven subject lines convert indecision into action.

Advanced Discovery—Multi-Touch Sequence Testing:

The team didn't stop at single-send optimization. They tested three-email sequences with different subject line progressions:

Sequence A (Progressive Urgency):

- First email (+1 hour): "Still thinking about your cart?"

- Second email (+24 hours): "Your cart items are selling out"

- Third email (+48 hours): "Final notice: Cart expires in 2 hours"

Sequence B (Discount Escalation):

- First email (+1 hour): "You left items behind"

- Second email (+24 hours): "Complete your order & save 10%"

- Third email (+48 hours): "Last chance: 15% off your cart"

Sequence C (Mixed Strategy):

- First email (+1 hour): "Your cart items are selling out fast"

- Second email (+24 hours): "Complete your order & save 10%"

- Third email (+48 hours): "Cart expires in 2 hours + 15% off"

Sequence Performance:

Winner: Sequence C (mixed strategy) balanced urgency and incentive, driving highest recovery without excessive discount dependency.

Critical Nuance—Discount Dependency Risk:

The team noticed that Sequence B (discount escalation) created a behavioral pattern where some customers intentionally abandoned carts to receive discount codes. To test this hypothesis, they analyzed repeat purchase behavior:

Sequence B doubled the abandonment training rate—customers learned that abandoning carts = bigger discounts. Sequence C balanced recovery with sustainable behavior, making it the long-term winner despite slightly lower immediate recovery rates.

Advanced Tactics: Scaling Beyond Basics

Once you've mastered the core framework, implement these advanced strategies:

Tactic 1: Audience Segmentation Testing

Don't assume one subject line works universally. Segment by behavior and test accordingly.

Behavioral Segments:

- High engagers: Opened 5+ emails in last 30 days (test bold, creative subject lines)

- Low engagers: Opened 0-1 emails in last 30 days (test direct, value-driven subject lines)

- Recent purchasers: Bought within 14 days (test product-related, upsell subject lines)

- Long-time subscribers: Joined 12+ months ago (test loyalty/insider framing)

Generate segment-specific variants with tailored GPT prompts:

Audience segment: Low engagers (opened 0-1 emails last 30 days)

Challenge: Re-engage dormant subscribers without unsubscribes

Tone: Direct value proposition, no fluff

Generate 10 re-engagement subject lines emphasizing immediate value.

Comprehensive Segmentation Matrix:

Dynamic Content Strategy:

Advanced email platforms (Klaviyo, HubSpot, Braze) support dynamic subject lines based on subscriber data. Implement variable insertion for hyper-personalization:

Pattern: "[Name], {dynamic_benefit} in {time_frame}"

Variables:

- dynamic_benefit: Pulled from browsing history or past purchases

- time_frame: Calculated based on average decision cycle

Examples:

- "Sarah, save $120 on outdoor gear this week"

- "Mike, your 30% discount expires in 6 hours"

- "Jessica, 5 new arrivals matching your style"

This level of personalization typically drives 8-15% higher open rates than static subject lines, but requires robust data infrastructure.
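Each platform has its own merge-tag syntax, so the sketch below shows only the underlying logic in plain Python: fill the pattern when subscriber data exists and fall back to a generic line when it does not. The function `render_subject`, the record fields, and the fallback text are all hypothetical. The time frame carries its own preposition ("this week", "in 6 hours") so the result reads naturally.

```python
def render_subject(name, dynamic_benefit, time_frame, fallback="Your picks are ready"):
    """Fill the personalization pattern, or fall back when data is missing."""
    if not (name and dynamic_benefit and time_frame):
        return fallback
    return f"{name}, {dynamic_benefit} {time_frame}"


# Hypothetical subscriber records.
subscribers = [
    {"name": "Sarah", "benefit": "save $120 on outdoor gear", "time_frame": "this week"},
    {"name": "", "benefit": "", "time_frame": ""},  # no data on file
]
for person in subscribers:
    print(render_subject(person["name"], person["benefit"], person["time_frame"]))
```

The fallback branch matters in practice: a subject line that ships with an empty name or benefit is worse than a static one.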

Tactic 2: Emoji Testing Framework

Emojis are polarizing. Some audiences love them; others find them unprofessional.

Test systematically:

- Baseline: Text-only subject line

- Variant A: Emoji at start (🎉 Big Sale Inside)

- Variant B: Emoji at end (Big Sale Inside 🎉)

- Variant C: Emoji mid-line (Big 🔥 Sale Inside)

- Variant D: Multiple emojis (🎉 Big Sale 🔥 Inside 🛍️)

Track not just open rate, but unsubscribe rate. If emoji usage increases unsubscribes by 50%, abandon it—even if opens increase.

Emoji Selection Best Practices:

- Match email content (don't use 🎉 for a serious product recall notice)

- Avoid overused emojis in your niche (every e-commerce flash sale uses 🔥)

- Test niche-specific emojis (🏋️ for fitness, 🍴 for food, 📊 for B2B data)

Comprehensive Emoji Performance Data:

Critical Discovery—Mobile vs. Desktop Emoji Rendering:

Emojis render differently across devices and email clients. Test thoroughly before deployment:

Emoji Fatigue Analysis:

Using emojis in every subject line reduces their effectiveness through habituation:

Recommendation: Reserve emojis for high-priority campaigns (major sales, launches, urgency-driven emails) to maintain their effectiveness.

Tactic 3: Preview Text Synergy

Most marketers ignore preview text. This is the 35-90 characters displayed after the subject line in inbox previews.

Poor execution:

Subject: "Flash Sale Starts Now"

Preview: "View this email in your browser | Unsubscribe"

Strong execution:

Subject: "Flash Sale Starts Now"

Preview: "30% off activewear—24 hours only. Shop best-sellers before they're gone."

Generate preview text variants alongside subject lines:

Subject line: "[Name], 24 Hours Only: 30% Off Activewear"

Generate 5 preview text options that:

1. Extend the urgency message

2. Add specific product examples

3. Include a clear CTA

4. Work together with subject line to form complete thought

Length: 60-90 characters

Test subject line + preview text as combined units. Variant A might have the best subject line, but Variant C's subject + preview combination drives higher engagement.

Subject + Preview Combination Strategies:

Mobile Truncation Management:

Preview text displays differently by device:

Best Practice: Structure preview text so the first 40 characters form a complete thought, with characters 41+ providing bonus context.

Example:

- First 40 chars: "30% off everything: shop before midnight"

- Chars 41-90: " Free shipping + returns on all orders over $50"

Desktop users get full context; mobile users still see complete value proposition.

Tactic 4: Time-of-Day Optimization

Subject line performance varies by send time.

Morning sends (6-9 AM): Recipients scan subject lines quickly during commute. Short, high-impact subject lines perform better.

Midday sends (11 AM-2 PM): Inbox is crowded. Curiosity-driven subject lines stand out.

Evening sends (6-9 PM): Recipients have more time. Longer, detailed subject lines work.

Test the same subject line variants at different send times to identify time-specific winners.

Comprehensive Send Time Analysis:

Day-of-Week Performance Variance:

Subject line patterns that work Tuesday may fail Sunday:

Implementation Strategy:

Create a send-time optimization matrix:

IF send_time = "6-8 AM" AND day = "Monday-Friday"

THEN use: Ultra-short, urgent subject lines

IF send_time = "7-10 PM" AND day = "Saturday-Sunday"

THEN use: Longer, storytelling subject lines

IF send_time = "11 AM-1 PM" AND day = "Tuesday-Thursday"

THEN use: Curiosity-driven, professional subject lines

This dynamic approach can lift open rates 12-20% beyond static subject line strategies.
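The IF/THEN matrix above translates directly into a small lookup function. This is a sketch under stated assumptions: `subject_style` is a hypothetical name, each window is read as starting on the hour and ending just before the closing hour, and any slot the matrix does not mention returns a default label.

```python
from datetime import datetime


def subject_style(send_time):
    """Map a send time to the subject line style from the matrix above."""
    hour = send_time.hour
    day = send_time.weekday()  # Monday is 0, Sunday is 6
    if 6 <= hour < 8 and day <= 4:
        return "ultra-short, urgent"
    if 19 <= hour < 22 and day >= 5:
        return "longer, storytelling"
    if 11 <= hour < 13 and 1 <= day <= 3:
        return "curiosity-driven, professional"
    return "default"  # slots the matrix does not cover


print(subject_style(datetime(2024, 1, 8, 7, 0)))    # a Monday morning
print(subject_style(datetime(2024, 1, 13, 20, 0)))  # a Saturday evening
```

The returned label can then be passed as the pattern and tone parameters of the generation prompt, so each scheduled send gets variants in the matching style.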

Tactic 5: AI Feedback Loop

Feed performance data back into GPT to generate progressively better variants.

Round 1: Generate 50 variants, test top 10.

Round 2 Prompt:

Previous test results:

Variant A: "30% Off Ends Tonight—Gear Up Now" | Open rate: 24.3%

Variant B: "[Name], 24 Hours Only: 30% Off Activewear" | Open rate: 28.1% [WINNER]

Variant C: "Flash Sale: Save 30% Before Midnight" | Open rate: 21.7%

Variant D: "Your 24-Hour Window: 30% Off Everything" | Open rate: 23.5%

Variant E: "Don't Miss This: 30% Off Ends Tomorrow" | Open rate: 19.2%

Analyze why Variant B outperformed others. Generate 10 new subject lines that:

1. Incorporate the winning elements (personalization, urgency, specificity)

2. Introduce 1-2 new variations to test refinements

3. Avoid the underperforming patterns from Variants C and E

GPT-4 will identify patterns (personalization + specificity + urgency) and iterate on successful formulas.

Systematic Iteration Framework:

After five rounds of iteration, you'll have evolved from generic patterns to highly optimized, audience-specific formulas that consistently outperform baseline by 35-60%.

Machine Learning Pattern Recognition:

For advanced users with coding skills, implement a lightweight ML feedback system:

    # Sketch of pattern learning with a linear model.
    # Assumes subject_line_tests.csv has 'subject' and 'open_rate' columns,
    # with open_rate stored as a fraction (0.24, not 24).
    import pandas as pd
    from sklearn.linear_model import LinearRegression

    # Load historical test data (one row per tested subject line)
    data = pd.read_csv('subject_line_tests.csv')

    # Feature engineering: turn each subject line into simple numeric features
    subject = data['subject']
    # regex=False matches the literal text "[Name]" instead of a character class
    data['has_personalization'] = subject.str.contains('[Name]', regex=False).astype(int)
    data['has_emoji'] = subject.str.contains('🎉|🔥|💥').astype(int)
    # case=False also catches capitalized words such as "Now" or "Midnight"
    data['has_urgency'] = subject.str.contains('hours|today|now|midnight', case=False).astype(int)
    data['char_count'] = subject.str.len()
    data['has_number'] = subject.str.contains(r'\d').astype(int)

    # Train model
    features = ['has_personalization', 'has_emoji', 'has_urgency', 'char_count', 'has_number']
    X = data[features]
    y = data['open_rate']
    model = LinearRegression()
    model.fit(X, y)

    # Generate a prediction for a new variant described by the same features
    new_variant = pd.DataFrame({
        'has_personalization': [1],
        'has_emoji': [1],
        'has_urgency': [1],
        'char_count': [45],
        'has_number': [0],
    })
    predicted_open_rate = model.predict(new_variant)
    print(f"Predicted open rate: {predicted_open_rate[0]:.1%}")

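This gives you a rough performance estimate before testing, so you can prioritize the variants with the highest predicted impact. With only a handful of features, treat the prediction as a ranking aid rather than a substitute for the test itself.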

Common Pitfalls and How to Avoid Them

Pitfall 1: Testing Without Statistical Significance

Problem: You test 5 variants on a 5,000-person list (1,000 per variant). Variant A gets 22% open rate, Variant B gets 24%. You declare B the winner, but the difference is noise.

Solution: Use a sample size calculator before testing. For small lists, test fewer variants to maintain statistical power. A 2-variant test with 2,500 people each is stronger than a 5-variant test with 1,000 each.

Statistical Significance Decision Matrix:

Use this rule of thumb:

- Difference < 2 percentage points: Need 5,000+ per variant for confidence

- Difference 2-5 percentage points: Need 2,000+ per variant

- Difference > 5 percentage points: Can be confident with 1,000+ per variant
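To check a specific result rather than rely on a rule of thumb, you can run a two-proportion z-test on the raw counts. The sketch below uses the standard pooled-variance version of that test with only the Python standard library; the function name `two_proportion_p_value` is illustrative, and the test does not adjust for comparing more than two variants.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(opens_a, sent_a, opens_b, sent_b):
    """Two-sided p-value for the difference between two open rates."""
    rate_a, rate_b = opens_a / sent_a, opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# The scenario from the problem statement: 22% vs. 24% with 1,000 per variant.
small = two_proportion_p_value(220, 1_000, 240, 1_000)
# The same rates with 10,000 per variant.
large = two_proportion_p_value(2_200, 10_000, 2_400, 10_000)

for label, p in (("1,000 per variant", small), ("10,000 per variant", large)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3f} ({verdict} at 95% confidence)")
```

The same two-point gap that is indistinguishable from noise at 1,000 recipients per variant becomes clearly significant at 10,000, which is why small lists should test fewer variants.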

Pitfall 2: Overfitting to Outliers

Problem: One curiosity-driven subject line ("You Won't Believe What We Just Did") gets 35% open rate—2x your average. You assume curiosity is the answer and use it every campaign. Performance regresses to 18%.

Solution: Outliers happen. Validate patterns across multiple campaigns. A pattern isn't "real" until it wins 3+ times across different contexts.

Outlier Detection Framework:

Real Example—The "You Won't Believe" Trap:

A home goods e-commerce brand tested "You won't believe what we just added" for a new product announcement. It achieved 34.8% open rate—their highest ever.

They used curiosity-driven subject lines for the next 5 campaigns:

- Campaign 2: 28.4% (still strong)

- Campaign 3: 22.7% (declining)

- Campaign 4: 19.2% (below previous baseline)

- Campaign 5: 16.8% (significantly worse)

- Campaign 6: 15.1% (audience fatigue)

Root cause: Curiosity without delivery creates trust erosion. The first email delivered genuine surprise. Subsequent emails over-promised, leading to disengagement.

Solution: Reserve curiosity-driven subject lines for genuinely novel moments (major launches, significant announcements). Use more transparent value propositions for regular campaigns.

Pitfall 3: Ignoring Downstream Metrics

Problem: Clickbait subject lines increase open rates but crater click-through rates. Opens spike to 32%, but clicks drop from 4% to 1.2%. Your revenue decreases despite "better" subject lines.

Solution: Optimize for click rate or conversion rate, not open rate alone. A 20% open rate with a 5% CTR among openers (1% of the list clicks) beats a 30% open rate with a 2% CTR among openers (0.6% of the list clicks).
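The comparison is simple multiplication, sketched here so you can plug in your own numbers. It assumes CTR is measured against opens rather than sends, and the function name is illustrative.

```python
def clicks_per_send(open_rate, click_to_open_rate):
    """Share of the whole list that clicks: open rate times clicks per open."""
    return open_rate * click_to_open_rate


steady = clicks_per_send(0.20, 0.05)     # lower opens, engaged readers
clickbait = clicks_per_send(0.30, 0.02)  # higher opens, disappointed readers

print(f"Steady subject line:    {steady:.1%} of the list clicks")
print(f"Clickbait subject line: {clickbait:.1%} of the list clicks")
```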

Full-Funnel Performance Comparison:

Subject Line B wins on vanity metrics (opens) but loses on business metrics (revenue, engagement quality).

Optimization Priority Hierarchy:

- Revenue per send (ultimate business metric)

- Conversion rate (indicates message match quality)

- Click-through rate (shows engagement depth)

- Open rate (first-step engagement)

- List growth rate (unsubs vs. new subscribers)

Dashboard Recommendation:

Create a composite score that weights multiple metrics:

Email Performance Score =

(Open Rate × 0.2) +

(CTR × 0.3) +

(Conversion Rate × 0.4) +

((1 - Unsub Rate) × 0.1)

This prevents over-optimization on any single metric while keeping focus on business outcomes.
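A minimal sketch of the composite score as a function, using the weights above. All inputs are assumed to be fractions (0.20 for 20%), and the sample metrics are hypothetical.

```python
# Weights from the formula above; "retention" is (1 - unsub rate).
WEIGHTS = {"open_rate": 0.2, "ctr": 0.3, "conversion_rate": 0.4, "retention": 0.1}


def email_performance_score(open_rate, ctr, conversion_rate, unsub_rate):
    """Weighted composite of funnel metrics; inputs are fractions, not percents."""
    return (
        open_rate * WEIGHTS["open_rate"]
        + ctr * WEIGHTS["ctr"]
        + conversion_rate * WEIGHTS["conversion_rate"]
        + (1 - unsub_rate) * WEIGHTS["retention"]
    )


# Hypothetical campaign: 20% opens, 5% CTR, 3% conversion, 0.2% unsubscribes.
score = email_performance_score(0.20, 0.05, 0.03, 0.002)
print(f"Email Performance Score: {score:.4f}")
```

Raw rates sit on different scales (open rates are usually several times larger than conversion rates), so the larger metrics can still carry most of the score. One option, which is an assumption on top of the formula rather than part of it, is to divide each metric by its historical baseline before applying the weights.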

Pitfall 4: Neglecting Mobile Rendering

Problem: Your winning subject line is "Exclusive Member Benefit: Premium Access to New Collection Available Now." On mobile (where 60% of emails open), it truncates to "Exclusive Member Benefit: Premiu..." The context is lost.

Solution: Preview subject lines in mobile view. Keep high-impact words in the first 30 characters. Test short variants specifically for mobile-heavy lists.
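A quick way to preview truncation before opening a rendering tool is sketched below. The 32-character cutoff mirrors the example above and the 30-character keyword window comes from the guidance in the solution; real clients cut at different widths, so treat both limits as adjustable assumptions.

```python
def mobile_preview(subject, limit=32):
    """Approximate how a subject line looks when cut off at `limit` characters."""
    if len(subject) <= limit:
        return subject
    return subject[:limit] + "..."


def keywords_visible(subject, keywords, limit=30):
    """Report which high-impact keywords appear within the first `limit` characters."""
    visible = subject[:limit].lower()
    return {keyword: keyword.lower() in visible for keyword in keywords}


subject = "Exclusive Member Benefit: Premium Access to New Collection Available Now."
print(mobile_preview(subject))
print(keywords_visible(subject, ["Exclusive", "New Collection"]))
```

Running every generated variant through a check like this makes it easy to discard candidates whose key phrase falls outside the visible window.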

Mobile Truncation Testing Checklist:

Front-Loading Strategy:

Structure subject lines so critical information appears first:

The front-loaded versions communicate value even when truncated.

Mobile-Specific Subject Line Testing:

Pitfall 5: Spam Filter Triggers

Problem: Aggressive urgency-driven subject lines ("ACT NOW! LIMITED TIME! BUY TODAY!") trigger spam filters. Your open rate drops to 3% because 70% of sends land in spam folders.

Solution: Use spam checker tools (Mail-Tester, GlockApps) before sending. Avoid:

- All caps words

- Multiple exclamation marks

- Phrases like "free money," "urgent action required," "click here now"

- Excessive punctuation (!!!, ???)
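The checklist above can be turned into a rough pre-filter for AI-generated variants. This is a heuristic sketch only: it does not reproduce how Mail-Tester, GlockApps, or any mailbox provider scores messages, and the phrase list contains just the examples named above.

```python
import re

# Phrases named in the list above; extend with your own.
TRIGGER_PHRASES = ("free money", "urgent action required", "click here now")


def spam_warnings(subject):
    """Return human-readable warnings for one subject line."""
    warnings = []
    words = re.findall(r"[A-Za-z]{2,}", subject)
    caps_words = [word for word in words if word.isupper()]
    if caps_words:
        warnings.append("all-caps words: " + ", ".join(caps_words))
    if subject.count("!") > 1:
        warnings.append("multiple exclamation marks")
    if re.search(r"[!?]{3,}", subject):
        warnings.append("excessive punctuation run")
    lowered = subject.lower()
    for phrase in TRIGGER_PHRASES:
        if phrase in lowered:
            warnings.append("trigger phrase: " + phrase)
    return warnings


for candidate in (
    "FINAL HOURS!!! BUY NOW OR MISS OUT!!!",
    "Final hours: 30% off ends at midnight",
):
    print(candidate, "->", spam_warnings(candidate) or "no warnings")
```

The all-caps check will also flag legitimate acronyms such as "VIP", so treat warnings as prompts for review rather than automatic rejections.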

Comprehensive Spam Trigger Avoidance Guide:

Spam Score Thresholds:

Pre-Send Spam Check Workflow:

- Generate subject line variants

- Score all variants with Mail-Tester

- Eliminate any scoring below 7/10

- Send top performers to small test segment (500-1000)

- Check inbox placement with GlockApps

- If placement is 95% or higher, proceed with full send

- If placement <95%, revise and retest

Real-World Example—Spam Filter Recovery:

An e-commerce brand's "FINAL HOURS!!! BUY NOW OR MISS OUT!!!" subject line had:

- SpamAssassin score: 6.2 (high risk)

- Inbox placement: 28% (72% went to spam)

- Effective open rate: 4.1% (mostly Gmail promotions tab)

Revised to: "Final hours: 30% off ends at midnight"

- SpamAssassin score: 1.1 (low risk)

- Inbox placement: 96%

- Effective open rate: 26.8%

The revision maintained urgency while avoiding trigger patterns, producing a 6.5x improvement in effective open rate (4.1% to 26.8%) and lifting inbox placement from 28% to 96%.

Implementation Roadmap: First 60 Days

Days 1-7: Foundation

- Audit last 100 campaigns, document patterns

- Set up testing framework and spreadsheet

- Identify pattern categories to test

- Generate first batch of 50 variants for upcoming campaign

Days 8-21: First Testing Sprint (3 campaigns)

- Test 5 pattern categories across 3 campaigns

- Deploy, measure, document results

- Identify early pattern winners

Days 22-35: Refinement Sprint (3 campaigns)

- Generate variants based on early winners

- Test refinements and iterations

- Begin audience segmentation testing

Days 36-49: Optimization Sprint (3 campaigns)

- Implement best-performing patterns as defaults

- Test advanced tactics (emoji, preview text synergy)

- Conduct time-of-day optimization tests

Days 50-60: Analysis and Scaling

- Aggregate data across all tests

- Calculate pattern-level performance metrics

- Document winning formulas in brand playbook

- Train team on repeatable process

By day 60, you'll have:

- Tested 9 campaigns with 45 variants total

- Identified 2-3 high-confidence winning patterns

- Improved open rates by 15-35% on average

- Built a repeatable testing process

Detailed Week-by-Week Implementation Plan:

Total time investment: 28-38 hours over 60 days (roughly 3-4.5 hours per week)

Post-Implementation Maintenance:

Once established, subject line testing requires minimal ongoing time:

- Per campaign: 30-45 minutes (variant generation + deployment)

- Monthly review: 1-2 hours (pattern analysis + playbook updates)

- Quarterly optimization: 2-4 hours (segment refinement + new pattern testing)

ROI Calculation: Time vs. Revenue Impact

Time Investment:

- Initial setup and audit: 4 hours

- Per-campaign generation: 30 minutes

- Per-campaign deployment: 15 minutes

- Per-campaign analysis: 15 minutes

- Total per campaign: 60 minutes

Financial Return (Example: 50k List, $100 AOV, 2 Campaigns/Week):

Baseline performance:

- 50,000 subscribers

- 18% open rate (9,000 opens)

- 4% click rate (360 clicks)

- 3% conversion rate (11 purchases)

- Revenue per campaign: $1,100

After optimization (25% open rate, 5% CTR, 3% conversion):

- 50,000 subscribers

- 25% open rate (12,500 opens)

- 5% click rate (625 clicks)

- 3% conversion rate (19 purchases)

- Revenue per campaign: $1,900

Incremental revenue per campaign: $800

Campaigns per year: 104 (2/week)

Annual incremental revenue: $83,200

Time investment per year: 104 hours (1 hour per campaign)

ROI: $800/hour of testing time.

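Even a conservative 10% relative open rate improvement (18% to 19.8%), with click and conversion rates unchanged, adds roughly $100 per campaign, or about $11,000 per year for the same 104 hours of work.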
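The funnel arithmetic in this section can be reproduced with a short sketch, which also makes it easy to try a conservative scenario. As in the figures above, click rate is applied to opens and conversion rate to clicks; the function name is illustrative. Because the sketch does not round purchases to whole orders, it returns $1,080 and $1,875 per campaign rather than the rounded $1,100 and $1,900.

```python
def campaign_revenue(list_size, open_rate, click_rate, conversion_rate, aov):
    """Expected revenue for one send; click_rate is clicks per open."""
    opens = list_size * open_rate
    clicks = opens * click_rate
    purchases = clicks * conversion_rate
    return purchases * aov


LIST_SIZE, AOV, CAMPAIGNS_PER_YEAR = 50_000, 100, 104

baseline = campaign_revenue(LIST_SIZE, 0.18, 0.04, 0.03, AOV)
optimized = campaign_revenue(LIST_SIZE, 0.25, 0.05, 0.03, AOV)
# Conservative case: 10% relative lift in open rate only (18% -> 19.8%).
conservative = campaign_revenue(LIST_SIZE, 0.198, 0.04, 0.03, AOV)

for label, revenue in (("Optimized", optimized), ("Conservative", conservative)):
    lift = revenue - baseline
    yearly = lift * CAMPAIGNS_PER_YEAR
    print(f"{label}: +${lift:,.0f} per campaign, +${yearly:,.0f} per year")
```

Swap in your own list size, rates, and average order value to see how the per-hour return changes.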

ROI Across Different List Sizes:

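Key insight: ROI scales roughly linearly with list size, because testing time per campaign stays fixed while incremental revenue grows with every additional subscriber. The larger your list, the more valuable each percentage point of improvement becomes.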

Break-Even Analysis:

Even pessimistic scenarios achieve break-even within a month. Most implementations see positive ROI after 2-4 campaigns.

Tools and Resources

AI Generation:

- ChatGPT (GPT-4): Primary variant generation

- Claude (Anthropic): Alternative for brand voice alignment

- Copy.ai: Pre-built email subject line templates

Email Platforms with Native A/B Testing:

- Klaviyo: Up to 10 variants

- Mailchimp: Up to 3 variants (standard), 8 (premium)

- HubSpot: Up to 5 variants

- ActiveCampaign: Up to 5 variants

- ConvertKit: Up to 3 variants

Testing and Analytics:

- Evan Miller's A/B Test Calculator: Sample size and significance

- Optimizely Stats Engine: Sequential significance testing

- Litmus Email Previews: Preview rendering across clients

- Mail-Tester: Spam score checking

Documentation:

- Google Sheets: Testing log template

- Notion: Campaign performance database

- Airtable: Pattern library with filterable views

Additional Resources:

Recommended Tech Stack by Company Size:

Next Steps in Your Experimentation Journey

Subject line testing is one lever in the rapid experimentation toolkit. Apply similar frameworks to:

- Product Description Testing: Use AI to generate and test product page copy variants

- AI-Assisted Competitive Analysis: Systematically analyze what subject lines competitors use, identify gaps

- 48-Hour Testing Workflow: Apply these principles to landing page headline testing

Expanding the Framework to Other Marketing Channels:

The systematic testing methodology transfers directly to any text-based marketing asset.

Most email marketers test the same two subject lines every campaign. They improve incrementally—5% better open rates year-over-year.

You can improve 30% in 30 days by expanding your testing surface area. AI makes this economically viable. Every campaign becomes a learning opportunity. Every test builds your pattern library. Every win compounds.

Your competitors are still debating whether "Sale" or "Discount" works better. You're testing 10 variations of both and moving to the next experiment.

Ready to 3x your email open rates? Our Email & SMS Marketing services implement systematic testing frameworks for email programs that need to scale performance without scaling headcount. We handle the AI prompt engineering, statistical analysis, and documentation—you focus on strategy and creative direction. Schedule a consultation to discuss your email optimization roadmap.
