Most teams make the same mistakes twice. Sometimes three times. Sometimes indefinitely.

A project goes sideways. Lessons are discussed. Notes are taken. Everyone moves on. Six months later, the same mistake happens again. The notes exist somewhere, but nobody reads them. The lessons live in someone's head, but that person left.

Institutional knowledge is fragile. It degrades over time. It leaves when people leave. It hides in documents nobody finds.

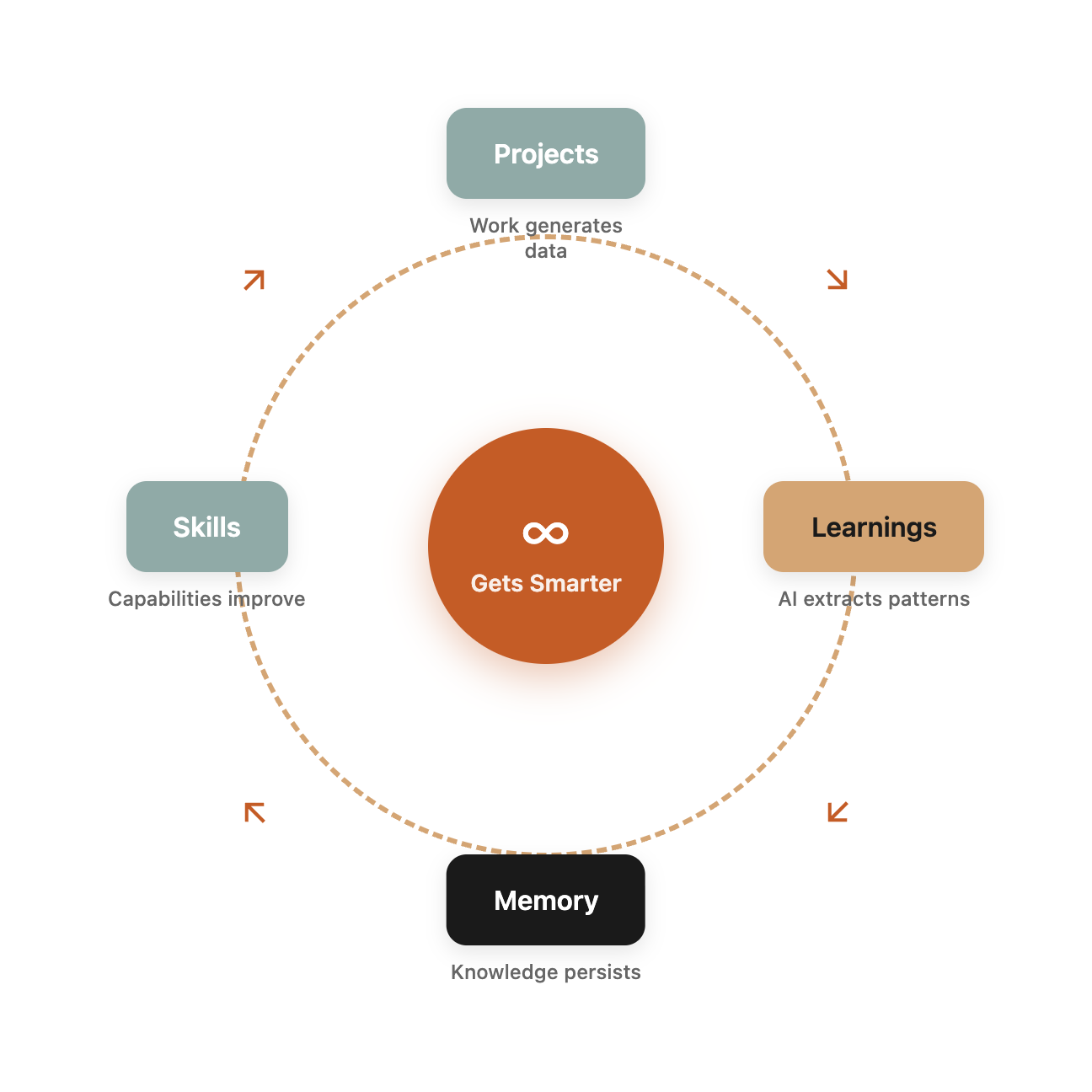

We built a system where every project teaches the AI something that makes the next project better. The system gets smarter with use—not just on individual tasks, but on patterns that repeat across clients and campaigns.

Here's how continuous improvement actually works when AI is doing the improving.

The Traditional Knowledge Management Failure

Let's be honest about how "learning" typically works in organizations:

Post-mortems happen: Someone writes a document after a project. It covers what went wrong, what went right, and what to do differently. It goes in a shared drive. It's never opened again.

Best practices documents exist: Someone maintains a wiki or playbook. It's comprehensive when created. It's outdated within months. New team members don't know it exists.

Tribal knowledge accumulates: Experienced team members know things: which clients are sensitive about what, which approaches work for which industries, which shortcuts cause problems. This knowledge walks out when they do.

Mistakes repeat: Despite all this documentation, the same issues arise. Not because people are careless, but because knowledge isn't surfaced at the moment of decision.

The failure isn't intent—it's architecture. Knowledge stored passively (in documents) doesn't reach decisions actively (in the moment).

The AI-Powered Alternative

Our system embeds learnings directly into the AI workflows that execute the work. Knowledge doesn't wait to be retrieved—it's present when decisions happen.

Level 1: Memory Systems

AI memory persists across conversations. When working with a client, the system knows:

Client context:

- Industry and competitive landscape

- Brand voice and style preferences

- Historical performance data

- Past issues and resolutions

- Stakeholder preferences and sensitivities

Project history:

- What approaches worked before

- What approaches failed before

- Specific feedback received

- Performance benchmarks achieved

This context doesn't require manual lookup. It's automatically available when the client comes up.

Example: Starting a new content brief for Client X, the system knows "Client X prefers data-driven content with specific statistics, dislikes jargon, and has asked to avoid comparison to Competitor Y in past projects."
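To make that concrete, here is a minimal sketch of how client context could be stored and surfaced automatically. It is an illustration, not our production code: the `ClientMemory` class, its field names, and the `build_prompt` helper are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ClientMemory:
    """Hypothetical per-client record; the field names are illustrative."""
    name: str
    preferences: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)
    past_issues: list[str] = field(default_factory=list)

    def as_context(self) -> str:
        """Render the record as plain text for the top of a task prompt."""
        lines = [f"Client: {self.name}"]
        lines += [f"Prefers: {p}" for p in self.preferences]
        lines += [f"Avoid: {a}" for a in self.avoid]
        lines += [f"Past issue: {i}" for i in self.past_issues]
        return "\n".join(lines)


def build_prompt(task: str, client: str, store: dict[str, ClientMemory]) -> str:
    """Prepend stored client context to the task, if any exists."""
    record = store.get(client)
    if record is None:
        return task
    return f"{record.as_context()}\n\nTask: {task}"


store = {
    "Client X": ClientMemory(
        name="Client X",
        preferences=["data-driven content with specific statistics"],
        avoid=["jargon", "comparison to Competitor Y"],
    )
}
prompt = build_prompt("Draft a content brief", "Client X", store)
print(prompt)
```

The point of the design is that nobody has to remember to look anything up: the lookup happens inside the step that starts the work.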

Level 2: Skill Evolution

Skills—the modular capabilities that handle specific workflows—evolve based on usage.

Pattern detection: After running 100 SEO audits, the system identifies patterns:

- Technical issues that commonly correlate

- Red flags that predict larger problems

- Recommendations that clients typically implement versus ignore

- Edge cases that required manual handling

Methodology refinement: Each skill has documented methodology. As edge cases arise, methodology updates:

Before: "Check for duplicate title tags"

After: "Check for duplicate title tags. Note: e-commerce sites commonly have product variants with near-duplicate titles. Flag these separately from true duplicates and recommend a SKU differentiation strategy."

Example library growth: Skills include examples of good inputs and outputs. As new examples arise, the library grows:

"Here's how the content brief skill handled a highly regulated industry..."

"Here's how the CRO skill handled a site with accessibility requirements..."

Level 3: Cross-Client Pattern Recognition

Individual client memory is valuable. Cross-client pattern recognition is transformational.

Industry patterns: "B2B SaaS companies consistently see highest conversion improvement from pricing page trust signals. E-commerce companies consistently see highest improvement from checkout friction reduction."

Seasonal patterns: "Outdoor education organizations see traffic peaks in January (New Year planning) and September (school year planning). Content strategies should anticipate these windows."

Failure patterns: "Clients who skip the research phase of content strategy consistently underperform on content ROI. Recommend emphasizing research investment in proposals."

These patterns emerge only from data across many clients; no single project would reveal them.

How Learning Actually Happens

Let me walk through the mechanics.

Trigger: Project Completion

When a project completes, the system captures:

What worked:

- Which approaches produced results

- Which recommendations got implemented

- What the measurable outcomes were

What didn't work:

- Where issues arose

- What required iteration

- What the client pushed back on

What was unexpected:

- Edge cases not covered by existing methodology

- Client-specific requirements not anticipated

- Performance that exceeded or fell short of expectations

Analysis: Pattern Identification

The AI analyzes the captured data for patterns:

Individual project learning: "This project required extra attention to mobile performance because the client's audience skews 70% mobile. Future projects for mobile-heavy audiences should prioritize mobile optimization earlier in scope."

Cross-project patterns: "Three of the last five e-commerce audits have identified the same Shopify app as causing page speed issues. Add to standard audit checklist as known issue."

Contradiction detection: "Previous learning suggested approach X for situation Y. This project tried approach X for situation Y and it failed. Investigate why—was the situation actually different, or is the learning wrong?"
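Two of these checks are simple enough to sketch in a few lines. The code below is a simplified illustration with assumed data shapes (lists of issue labels, dictionaries with `situation` and `recommends` keys) and made-up findings. It flags issues that show up in at least three of the last five audits, and it surfaces stored learnings that a failed project contradicts.

```python
from collections import Counter


def recurring_issues(audits, window=5, threshold=3):
    """Issues seen in at least `threshold` of the last `window` audits."""
    recent = audits[-window:]
    # set() so an issue listed twice in one audit counts once
    counts = Counter(issue for audit in recent for issue in set(audit))
    return sorted(issue for issue, n in counts.items() if n >= threshold)


def find_contradictions(learnings, outcome):
    """Stored learnings that recommended the approach that just failed."""
    if outcome["succeeded"]:
        return []
    return [
        item for item in learnings
        if item["situation"] == outcome["situation"]
        and item["recommends"] == outcome["approach"]
    ]


# Made-up audit findings, one list per audit, oldest first.
audits = [
    ["slow_app_script", "missing_alt_text"],
    ["duplicate_titles"],
    ["slow_app_script"],
    ["missing_alt_text"],
    ["slow_app_script", "duplicate_titles"],
]
flagged = recurring_issues(audits)

learnings = [{"situation": "Y", "recommends": "X"}]
outcome = {"situation": "Y", "approach": "X", "succeeded": False}
to_review = find_contradictions(learnings, outcome)
```

Here `flagged` contains only `slow_app_script`, and `to_review` holds the one learning that needs a second look. Neither function decides anything; both only queue items for a person to investigate.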

Integration: Memory and Skill Updates

Learnings integrate into the system:

Memory updates: Client-specific learnings add to client context. Next time that client comes up, relevant history surfaces automatically.

Skill updates: Methodology improvements propagate to skill documentation. Every future use of that skill benefits from the learning.

Pattern library updates: Cross-client patterns add to the knowledge base. Future similar situations get flagged with relevant patterns.

Verification: Human Review

Not all learnings are correct. Human review validates:

Quality check:

- Is this learning accurate?

- Is this generalizable or project-specific?

- Does this contradict existing knowledge for good reason?

Integration decision:

- Should this update memory, skills, or both?

- Who else should know about this learning?

- Are there downstream implications?

Human review takes 10-15 minutes per project. The leverage is enormous: one review improves all future executions.
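One way to enforce that review step is an approval gate: proposed learnings sit in a queue, and only approved ones reach memory, skills, or the pattern library. The sketch below assumes three destination labels (`client`, `skill`, `pattern`) and a hypothetical `KnowledgeBase` class; the rejected learning in the example is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Learning:
    text: str
    scope: str  # "client", "skill", or "pattern" (hypothetical labels)
    approved: bool = False


class KnowledgeBase:
    """Hypothetical store with one list per destination."""

    def __init__(self):
        self.destinations = {"client": [], "skill": [], "pattern": []}
        self.pending = []

    def propose(self, learning: Learning) -> None:
        """Queue a learning; nothing is integrated before review."""
        self.pending.append(learning)

    def review(self, learning: Learning, approve: bool) -> None:
        """Record the reviewer's decision and integrate approved learnings."""
        self.pending.remove(learning)
        if approve:
            learning.approved = True
            self.destinations[learning.scope].append(learning.text)


kb = KnowledgeBase()
general = Learning(
    "Healthcare clients need a modified privacy/compliance section", "skill"
)
one_off = Learning("Launch slipped because of a holiday week", "client")
kb.propose(general)
kb.propose(one_off)
kb.review(general, approve=True)   # generalizable: goes into the skill
kb.review(one_off, approve=False)  # project-specific noise: discarded
```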

What This Looks Like in Practice

Example 1: SEO Audit Evolution

Month 1: First SEO audit for a healthcare client

- Standard methodology applied

- Issue: HIPAA-related concerns required different privacy recommendations

- Learning captured: Healthcare clients need modified privacy/compliance section

Month 3: Second healthcare client

- System flags healthcare context automatically

- Modified compliance recommendations included by default

- Client feedback: "This is exactly what we needed"

Month 6: Healthcare audit skill variant created

- Specialized methodology for healthcare sites

- Compliance checklist built in

- Common healthcare-specific issues pre-identified

One project's edge case became systematic capability. For more on how our SEO audits work, see AI-powered SEO audits: from 40 hours to 4.

Example 2: Content Brief Refinement

Version 1: Content briefs include keyword data and competitive analysis

Learning: Clients who implemented briefs without internal linking guidance had 40% worse outcomes

Version 2: Content briefs include internal linking recommendations

Learning: Writers struggled with brief format (too dense, hard to follow)

Version 3: Briefs reformatted with clearer sections and visual hierarchy

Learning: Briefs for technical topics need more context than general topics

Version 4: Topic-type detection triggers different brief depth levels
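As a rough illustration of what topic-type detection could look like, here is a keyword-based sketch. The term list and the two depth labels are assumptions made for the example; a real detector would more likely use a classifier or the model itself.

```python
import re

# Hypothetical marker terms; a real system would tune or replace these.
TECHNICAL_TERMS = {"api", "schema", "javascript", "server", "canonical", "crawl"}


def brief_depth(topic: str) -> str:
    """Return 'detailed' for technical topics, 'standard' otherwise."""
    words = set(re.findall(r"[a-z]+", topic.lower()))
    return "detailed" if words & TECHNICAL_TERMS else "standard"


technical = brief_depth("How to fix crawl budget issues")
general = brief_depth("Ten summer camp packing tips")
```

With these inputs, `technical` is `"detailed"` and `general` is `"standard"`.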

Each version incorporates learnings from real usage. See our detailed guide on AI-powered content brief generation.

Example 3: Cross-Client Pattern Discovery

Individual observations:

- Client A (outdoor education): January traffic spike

- Client B (outdoor recreation): January traffic spike

- Client C (camping gear): January traffic spike

Pattern identified: "Outdoor industry sees consistent January planning-season spike. Content strategies should have fresh content published in December to rank before the spike."

Application: All outdoor industry clients get Q4 content planning recommendations. Not because anyone asked—because the system learned.
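The detection step behind a pattern like this can be sketched simply: find each client's peak month, then check whether enough clients in the same sector share it. The visit counts below are made up, the `sector` tag is an assumed grouping field, and Client D is an invented counterexample.

```python
from collections import Counter, defaultdict


def peak_month(visits: dict[str, int]) -> str:
    """Month with the highest visit count."""
    return max(visits, key=visits.get)


def shared_peaks(clients, min_clients=3):
    """Map each sector to its most common peak month, if enough clients share it."""
    peaks_by_sector = defaultdict(list)
    for client in clients:
        peaks_by_sector[client["sector"]].append(peak_month(client["visits"]))
    patterns = {}
    for sector, peaks in peaks_by_sector.items():
        month, count = Counter(peaks).most_common(1)[0]
        if count >= min_clients:
            patterns[sector] = month
    return patterns


# Made-up visit counts for illustration only.
clients = [
    {"name": "Client A", "sector": "outdoor",
     "visits": {"Nov": 900, "Dec": 1000, "Jan": 1800}},
    {"name": "Client B", "sector": "outdoor",
     "visits": {"Nov": 400, "Dec": 450, "Jan": 700}},
    {"name": "Client C", "sector": "outdoor",
     "visits": {"Nov": 1200, "Dec": 1500, "Jan": 2100}},
    {"name": "Client D", "sector": "retail",
     "visits": {"Nov": 3000, "Dec": 2500, "Jan": 1000}},
]
patterns = shared_peaks(clients)
print(patterns)  # {'outdoor': 'Jan'}
```

The `min_clients` threshold keeps a single client's quirk from being promoted to an industry pattern.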

Building Your Improvement System

You can implement continuous improvement without custom infrastructure.

Minimum Viable Version

After each project, document:

- One thing that worked better than expected

- One thing that worked worse than expected

- One thing you'd do differently

Store in searchable format:

- Simple note in project folder

- Tag with client, project type, and themes

- Make it findable

Review before similar projects:

- Search for relevant past learnings

- Apply insights to current planning

- Update learnings based on new outcomes

This takes about 10 minutes at the end of each project and 5 minutes before starting a new one.

Intermediate Version

Structured capture: Create a template for project learnings:

- Project type

- Client industry

- What worked

- What didn't

- Recommendations for future

- Tags/categories
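If your team prefers something more structured than a document template, the same fields fit in a small record type with a tag search. This is a sketch under assumed names (`LearningNote`, `find_notes`); the first note reuses the healthcare example from earlier, and the second note's industry is invented.

```python
from dataclasses import dataclass, field


@dataclass
class LearningNote:
    project_type: str
    client_industry: str
    what_worked: str
    what_didnt: str
    recommendations: str
    tags: set[str] = field(default_factory=set)


def find_notes(notes, *tags):
    """Notes carrying every requested tag (case-insensitive)."""
    wanted = {t.lower() for t in tags}
    return [n for n in notes if wanted <= {t.lower() for t in n.tags}]


notes = [
    LearningNote(
        project_type="SEO audit",
        client_industry="healthcare",
        what_worked="Standard methodology",
        what_didnt="Generic privacy recommendations",
        recommendations="Use a modified privacy/compliance section",
        tags={"seo-audit", "healthcare", "compliance"},
    ),
    LearningNote(
        project_type="content brief",
        client_industry="e-commerce",  # invented for the example
        what_worked="Keyword data and competitive analysis",
        what_didnt="No internal linking guidance",
        recommendations="Add internal linking recommendations",
        tags={"content-brief", "internal-linking"},
    ),
]
matches = find_notes(notes, "Healthcare")
```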

Regular synthesis: Monthly, review learnings across projects:

- What patterns repeat?

- What methodology should update?

- What should become standard practice?

Integration: Update templates, checklists, and processes based on synthesis.

Advanced Version (What We Built)

Automated capture:

- System prompts for learnings at project close

- Learnings integrate into client memory automatically

AI analysis:

- Pattern recognition across projects

- Anomaly detection (contradictions, gaps)

- Suggested methodology updates

Skill evolution:

- Skills version-controlled

- Changes tracked with reasoning

- Rollback possible if updates don't work

Verification workflow:

- Human review of suggested changes

- Approval gates before integration

- Impact tracking after integration
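To show what version-controlled skills with tracked reasoning and rollback can mean in practice, here is a minimal in-memory sketch. The `Skill` and `SkillVersion` classes are hypothetical; keeping skill documents in a regular version control system such as Git would give you the same properties.

```python
from dataclasses import dataclass, field


@dataclass
class SkillVersion:
    methodology: str
    reason: str  # why this version replaced the previous one


@dataclass
class Skill:
    name: str
    history: list[SkillVersion] = field(default_factory=list)

    @property
    def current(self) -> str:
        """Methodology text of the latest version."""
        return self.history[-1].methodology

    def update(self, methodology: str, reason: str) -> None:
        """Append a new version along with why it changed."""
        self.history.append(SkillVersion(methodology, reason))

    def rollback(self) -> None:
        """Drop the latest version, keeping at least the original."""
        if len(self.history) > 1:
            self.history.pop()


skill = Skill("seo_audit")
skill.update("Check for duplicate title tags", "initial version")
skill.update(
    "Check for duplicate title tags. Flag e-commerce product-variant "
    "near-duplicates separately from true duplicates.",
    "edge case from e-commerce audits",
)
updated = skill.current
skill.rollback()  # the update did not work out, so restore the prior version
restored = skill.current
```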

The Compound Effect

Here's why this matters strategically:

Learning curve disappears: New team members benefit from all accumulated learning immediately. They don't need years of experience—they need access to the system.

Mistakes stop repeating: Known issues get caught by updated methodology. Edge cases become standard handling.

Quality improves automatically: Each project is better than the last, not because of heroic effort, but because the system improved.

Competitive moat builds: Accumulated learning is proprietary. Competitors can copy your current process, but they can't copy your history of improvement.

After 500 projects, the system knows more about executing marketing work than any individual could. That knowledge advantage compounds. This is the foundation of our AI-amplified marketing approach.

Start Today

If you're not capturing learnings systematically, start now:

This week:

- After your next completed project, spend 10 minutes documenting learnings

- Create a simple template for consistent capture

- Store somewhere searchable

This month:

- Review learnings from your last 5 projects

- Identify one pattern across projects

- Update one template or process based on the pattern

This quarter:

- Establish learning capture as standard practice

- Create monthly synthesis routine

- Track how often learnings prevent repeated issues

Let Us Accelerate Your Learning

Want to implement systematic improvement without building infrastructure? We can help.

What we offer:

- Learning capture methodology design

- AI-assisted pattern analysis

- Skill and process documentation

- Continuous improvement workflow implementation

Want to enable AI-powered learning systems across your team? Explore our AI Enablement program to build organizational AI capabilities that compound over time.

Investment: Starting at $3,000 for improvement system design.

Contact us:

- Email: hello@wedoworldwide.com

- Website: wedoworldwide.com

Tell us about your biggest repeated mistakes. We'll suggest how to make them stop.

About the Author: Mike McKearin is the founder of WE-DO Growth Agency. His team has captured learnings from 1,000+ client projects, building institutional knowledge that makes every new project benefit from everything that came before.