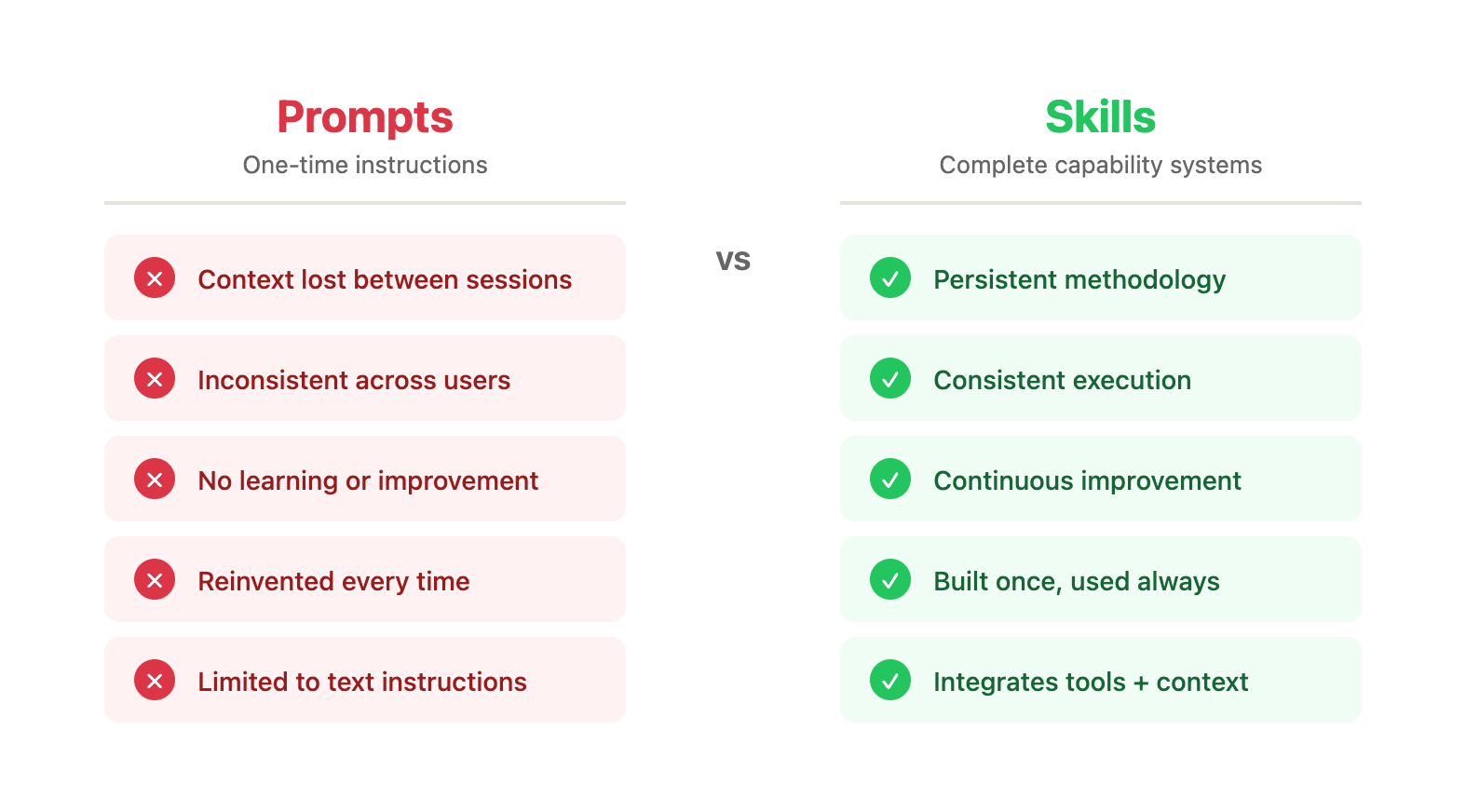

Most companies using AI are doing it wrong.

They copy-paste prompts into ChatGPT. They lose context between sessions. They reinvent solutions to problems they've solved before. Every interaction starts from zero.

That's not AI amplification—it's AI assistance. It helps with individual tasks but doesn't build toward anything. The tenth time you need an SEO audit prompt, you're no better off than the first.

We took a different approach: building skills instead of prompts. Modular capabilities that encode methodology, learn from usage, and compound over time.

The difference isn't just terminology. It's the difference between a tool and a system.

Prompts vs Skills: The Fundamental Distinction

A prompt is a set of instructions for a specific task:

"Analyze this website's SEO and provide recommendations. Consider technical factors, content quality, and backlink profile. Format as a prioritized list with impact scores."

A skill is a complete capability system:

SEO Audit Skill
├── Methodology
│   ├── Data sources to integrate
│   ├── Analysis framework
│   ├── Prioritization criteria
│   └── Output formats
├── Context
│   ├── Industry benchmarks
│   ├── Tool-specific knowledge
│   ├── Common issues library
│   └── Client history access
├── Tools
│   ├── Screaming Frog integration
│   ├── GSC API connection
│   ├── GA4 API connection
│   └── Competitive data API
├── Examples
│   ├── Sample audits by industry
│   ├── Edge case handling
│   └── Client feedback history
└── Improvement Loop
    ├── Outcome tracking
    ├── Pattern recognition
    └── Methodology updates

The prompt handles one task. The skill handles the capability—including everything needed to do it well, consistently, and better over time.
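To make the contrast concrete, here is a minimal Python sketch of how the five branches of the SEO Audit Skill might map onto a data structure. The class name `Skill`, its field names, and the sample values are illustrative assumptions, not the schema of our production system.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    """Hypothetical container with one field per branch of the skill tree."""
    name: str
    methodology: dict[str, str] = field(default_factory=dict)  # framework, prioritization, formats
    context: dict[str, str] = field(default_factory=dict)      # benchmarks, tool knowledge, history
    tools: list[str] = field(default_factory=list)             # integrations the skill may call
    examples: list[dict] = field(default_factory=list)         # sample audits, edge cases, feedback
    outcomes: list[dict] = field(default_factory=list)         # improvement loop: past results

    def record_outcome(self, outcome: dict) -> None:
        """Append one execution result so later analysis can use it."""
        self.outcomes.append(outcome)


# A prompt is a single string. A skill carries the prompt's surroundings with it.
prompt = "Analyze this website's SEO and provide recommendations."
seo_audit = Skill(
    name="SEO Audit",
    methodology={"prioritization": "rank issues by estimated impact"},
    tools=["Screaming Frog", "GSC API", "GA4 API"],
)
seo_audit.record_outcome({"client": "example-client", "worked": True})
```

The point of the sketch is the shape: the prompt has nowhere to store an outcome, while the skill does.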

Why Prompts Fail at Scale

Prompt-based AI workflows break down as usage increases:

Problem 1: Context Limits

Prompts can only include so much context. As complexity grows, you hit limits:

- Can't include entire methodology

- Can't reference past examples

- Can't access external data

- Can't remember previous interactions

Every session starts fresh. Prior learning doesn't compound.

Problem 2: Inconsistency

Different people write different prompts. Same task, different execution:

- Junior team member's prompt misses key elements

- Senior team member's prompt includes too much, creating confusion

- Results vary by who's asking, not what's needed

Quality depends on prompt quality, which in turn depends on the person, their mood, and the time they have available.

Problem 3: No Improvement

Prompts don't learn. You can refine them manually, but:

- Refinements live in someone's notes

- Different versions proliferate

- No systematic capture of what works

- No cross-team learning

The 100th time you use a prompt, it's no better than the first.

Problem 4: Institutional Fragility

When prompt knowledge lives in people's heads:

- Key person leaves, knowledge leaves

- Training new people requires teaching prompts

- Best practices spread inconsistently

- Tribal knowledge dominates

The organization's AI capability depends on specific people, not systems.

The Skill Architecture

Our skill-based system solves these problems through architecture.

Layer 1: Progressive Loading

Skills load context progressively—only what's needed, when needed:

Level 0 - Always loaded:

- Basic system instructions

- User preferences

- Current date/context

Level 1 - On skill invocation:

- Skill methodology

- Core instructions

- Essential context

Level 2 - On demand:

- Reference materials

- Examples library

- Historical data

Level 3 - As needed:

- Client-specific context

- Industry benchmarks

- Relevant past projects

This keeps context focused while making everything available.
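A minimal sketch of progressive loading, assuming the four levels are cumulative (requesting Level 2 also returns Levels 0 and 1). The `Level` enum, the `CONTEXT` list, and the `load_context` function are hypothetical names for illustration; a real loader would pull from files or a database rather than an in-memory list.

```python
from enum import IntEnum


class Level(IntEnum):
    ALWAYS = 0      # basic instructions, user preferences, current date
    ON_INVOKE = 1   # skill methodology and core instructions
    ON_DEMAND = 2   # reference materials, examples, historical data
    AS_NEEDED = 3   # client-specific context, benchmarks, past projects


# Hypothetical context store: each entry is tagged with the level that unlocks it.
CONTEXT = [
    (Level.ALWAYS, "System instructions"),
    (Level.ALWAYS, "User preferences"),
    (Level.ON_INVOKE, "SEO audit methodology"),
    (Level.ON_DEMAND, "Examples library"),
    (Level.AS_NEEDED, "Client-specific context"),
]


def load_context(max_level: Level) -> list[str]:
    """Return only the context entries at or below the requested level."""
    return [text for level, text in CONTEXT if level <= max_level]


print(load_context(Level.ON_INVOKE))
# ['System instructions', 'User preferences', 'SEO audit methodology']
```

Invoking the skill loads three entries instead of five; the examples library and client context stay out of the window until something asks for them.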

Layer 2: Modular Capabilities

Skills compose from smaller components:

Data components:

- API connections

- File access

- Database queries

Processing components:

- Analysis frameworks

- Prioritization logic

- Pattern recognition

Output components:

- Formatting templates

- Export formats

- Integration targets

Mix and match components to create new skills rapidly.
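One way to picture "mix and match" is plain function composition: each component takes a state dictionary and returns an extended copy. This is a sketch under that assumption; `compose`, `fetch_pages`, `prioritize`, and `to_report` are hypothetical names, and the page data is hard-coded where a real data component would call an API.

```python
from typing import Callable

Step = Callable[[dict], dict]


def compose(*steps: Step) -> Step:
    """Chain components so each one receives the previous one's output."""
    def run(state: dict) -> dict:
        for step in steps:
            state = step(state)
        return state
    return run


def fetch_pages(state: dict) -> dict:
    """Data component (stubbed): would normally query a crawler or API."""
    pages = [{"url": "/a", "errors": 3}, {"url": "/b", "errors": 0}]
    return {**state, "pages": pages}


def prioritize(state: dict) -> dict:
    """Processing component: rank pages by error count, worst first."""
    ranked = sorted(state["pages"], key=lambda p: p["errors"], reverse=True)
    return {**state, "ranked": ranked}


def to_report(state: dict) -> dict:
    """Output component: format the ranking as plain text."""
    lines = [f"{p['url']}: {p['errors']} errors" for p in state["ranked"]]
    return {**state, "report": "\n".join(lines)}


audit = compose(fetch_pages, prioritize, to_report)
result = audit({"site": "example.com"})
```

Swapping `to_report` for a different output component yields a new skill without touching the data or processing steps.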

Layer 3: Memory Integration

Skills access and update memory:

Read from memory:

- Client history

- Past project learnings

- User preferences

- Organizational knowledge

Write to memory:

- Project outcomes

- New learnings

- Pattern observations

- Updated context

Memory persists across sessions. Learning accumulates.
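The read and write sides can be sketched with nothing more than a JSON file. The `Memory` class, the key format `client:acme`, and the stored note are assumptions made for illustration; a production system would more likely use a database with access control.

```python
import json
import tempfile
from pathlib import Path


class Memory:
    """Hypothetical JSON-file memory holding a list of notes per key."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def _load(self) -> dict:
        if self.path.exists():
            return json.loads(self.path.read_text(encoding="utf-8"))
        return {}

    def read(self, key: str) -> list:
        """Return all notes stored under the key, or an empty list."""
        return self._load().get(key, [])

    def write(self, key: str, note: str) -> None:
        """Append a note under the key and save the file."""
        data = self._load()
        data.setdefault(key, []).append(note)
        self.path.write_text(json.dumps(data, indent=2), encoding="utf-8")


store_path = Path(tempfile.mkdtemp()) / "memory.json"

# Session 1 writes a learning. Session 2, a fresh object, can still read it.
Memory(store_path).write("client:acme", "Prefers short meta descriptions")
notes = Memory(store_path).read("client:acme")
```

Because state lives in the file rather than in the object, nothing is lost when a session ends.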

Layer 4: Improvement Loops

Skills improve through structured feedback:

Outcome tracking: After execution, capture:

- Did it work?

- What was the result?

- What required adjustment?

Pattern analysis: Across executions, identify:

- What correlates with success?

- What correlates with failure?

- What exceptions exist?

Methodology updates: Based on patterns, update:

- Default approaches

- Edge case handling

- Quality thresholds

Skills get better with use, automatically. For more on how this works, see our guide on the continuous improvement learning system.
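The three stages of the loop can be sketched as follows. The outcome records, the approach names, and the `min_runs` threshold are invented for illustration, and the "update" here is only a proposal that a person or a later step would still need to accept.

```python
from collections import Counter
from typing import Optional

# Outcome tracking: one hypothetical record per skill execution.
outcomes = [
    {"approach": "faq_section", "success": True},
    {"approach": "faq_section", "success": True},
    {"approach": "long_intro", "success": False},
    {"approach": "long_intro", "success": True},
    {"approach": "long_intro", "success": False},
]


def success_rates(records: list[dict]) -> dict[str, float]:
    """Pattern analysis: share of successful runs per approach."""
    totals = Counter(r["approach"] for r in records)
    wins = Counter(r["approach"] for r in records if r["success"])
    return {name: wins[name] / totals[name] for name in totals}


def propose_default(records: list[dict], min_runs: int = 2) -> Optional[str]:
    """Methodology update: suggest the best approach with enough runs behind it."""
    totals = Counter(r["approach"] for r in records)
    rates = success_rates(records)
    eligible = [name for name in rates if totals[name] >= min_runs]
    return max(eligible, key=lambda name: rates[name]) if eligible else None


rates = success_rates(outcomes)
suggestion = propose_default(outcomes)
print(suggestion)  # faq_section
```

The `min_runs` guard matters: without it, a single lucky execution could become the new default.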

Building Skills: The Practical Process

Here's how we create new skills.

Step 1: Define the Capability

What should this skill do?

Good skill definition: "Generate comprehensive SEO content briefs from a target keyword, including search intent analysis, competitive content review, recommended structure, and SEO specifications."

Bad skill definition: "Help with content." (Too vague—what kind of help? What format? What quality?)

Skills need clear scope: what they do, what they don't do, what inputs they need, what outputs they produce.
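A scope statement can be written down as a small record and checked for gaps. This is a sketch: `SkillDefinition` and `missing_scope` are hypothetical names, and the `does_not` entry for the content brief skill is an assumed example rather than something stated above.

```python
from dataclasses import dataclass, field


@dataclass
class SkillDefinition:
    """Hypothetical scope record: what the skill does, skips, needs, and produces."""
    does: str
    does_not: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)

    def missing_scope(self) -> list[str]:
        """Name the scope fields that are still empty."""
        scope = {"does_not": self.does_not, "inputs": self.inputs, "outputs": self.outputs}
        return [name for name, value in scope.items() if not value]


good = SkillDefinition(
    does="Generate SEO content briefs from a target keyword",
    does_not=["Write the article itself"],  # assumed boundary, for illustration
    inputs=["target keyword"],
    outputs=["search intent analysis", "competitive content review",
             "recommended structure", "SEO specifications"],
)
bad = SkillDefinition(does="Help with content.")

print(good.missing_scope())  # []
print(bad.missing_scope())   # ['does_not', 'inputs', 'outputs']
```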

Step 2: Document the Methodology

How should the skill execute?

Methodology includes:

- Step-by-step process

- Decision criteria at each step

- Quality standards

- Edge case handling

- Failure modes and recovery

Write this as if training a new team member who needs to execute independently.

Step 3: Gather Context

What knowledge does the skill need?

Context types:

- Domain knowledge (SEO best practices, industry standards)

- Tool knowledge (how to use specific platforms/APIs)

- Organizational knowledge (brand guidelines, client preferences)

- Historical knowledge (past examples, learned patterns)

Organize context so relevant pieces load when needed.

Step 4: Create Examples

What does good execution look like?

Example components:

- Input (what the skill received)

- Process (key decisions made)

- Output (what the skill produced)

- Outcome (how it performed)

Include edge cases and failure examples with lessons learned.
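The four example components, plus the lesson attached to a failure, fit naturally into a small record type. The `SkillExample` class and both library entries below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class SkillExample:
    """Hypothetical record for one worked example in a skill's library."""
    input: str         # what the skill received
    process: str       # key decisions made
    output: str        # what the skill produced
    outcome: str       # how it performed
    is_failure: bool = False
    lesson: str = ""   # filled in for failures and edge cases


library = [
    SkillExample(
        input="keyword: crm for startups",
        process="chose a comparison format",
        output="brief with 8 sections",
        outcome="performed as projected",
    ),
    SkillExample(
        input="keyword: best crm",
        process="ignored mixed search intent",
        output="brief with 5 sections",
        outcome="underperformed",
        is_failure=True,
        lesson="Classify intent before choosing a format",
    ),
]

# Failure examples earn their place through the lesson they carry.
lessons = [example.lesson for example in library if example.is_failure]
```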

Step 5: Define Improvement Triggers

How does the skill learn?

Trigger types:

- After every execution (basic outcome capture)

- On exception/error (what went wrong?)

- On explicit feedback (human evaluation)

- Periodically (pattern analysis across executions)

Each trigger specifies what to capture and how to integrate learnings.
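One possible way to wire triggers to capture logic is a small registry, sketched below. The `Trigger` enum mirrors the four trigger types above; the `on` decorator, the `fire` function, and the event fields are hypothetical names chosen for this example.

```python
from enum import Enum
from typing import Callable


class Trigger(Enum):
    EXECUTION = "after every execution"
    ERROR = "on exception/error"
    FEEDBACK = "on explicit feedback"
    PERIODIC = "periodically"


# Hypothetical registry: each trigger maps to the capture functions it runs.
handlers: dict[Trigger, list[Callable[[dict], dict]]] = {t: [] for t in Trigger}
captured: list[dict] = []


def on(trigger: Trigger):
    """Decorator that registers a capture function for one trigger type."""
    def register(func):
        handlers[trigger].append(func)
        return func
    return register


@on(Trigger.EXECUTION)
def capture_outcome(event: dict) -> dict:
    return {"kind": "outcome", "worked": event.get("worked")}


@on(Trigger.ERROR)
def capture_error(event: dict) -> dict:
    return {"kind": "error", "what_went_wrong": event.get("message")}


def fire(trigger: Trigger, event: dict) -> None:
    """Run every handler for the trigger and store what each one captured."""
    for handler in handlers[trigger]:
        captured.append(handler(event))


fire(Trigger.EXECUTION, {"worked": True})
fire(Trigger.ERROR, {"message": "keyword API timed out"})
```

Each handler answers "what to capture"; how the captured records feed back into the methodology is a separate step.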

Step 6: Test and Iterate

Deploy the skill on real work and check:

- Does it handle typical cases well?

- Does it handle edge cases acceptably?

- Does the output meet quality standards?

- Does improvement actually happen?

Iterate based on real usage, not theoretical completeness.

Skills in Practice: Examples

Example: Content Brief Skill

Invocation: "Create a content brief for the keyword 'project management software for small teams'"

What the skill does:

1. Keyword analysis:

   - Query keyword database for volume, difficulty, intent

   - Expand to related keywords and questions

   - Classify search intent

2. Competitive analysis:

   - Pull current top 10 ranking pages

   - Extract word counts, topics covered, formats used

   - Identify content gaps

3. Structure generation:

   - Recommend sections based on competitive analysis and intent

   - Specify word counts per section

   - Note featured snippet opportunities

4. SEO specifications:

   - Generate meta title and description

   - Recommend URL structure

   - Identify internal linking opportunities

5. Quality check:

   - Verify all required elements included

   - Check against past successful briefs

   - Flag any anomalies for human review

Output: Complete, ready-to-use content brief. See our detailed guide on AI-powered content brief generation.

Improvement: After content is written and performs, capture:

- How closely did the writer follow the brief?

- How did the content perform vs. projections?

- What would have improved the brief?
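The quality check step of the content brief skill is the easiest part to sketch in code. In the version below, the list of required elements, the 60-character title threshold, and the `quality_check` function are all assumptions for illustration, not the checks our skill actually runs.

```python
REQUIRED_ELEMENTS = ["keyword_analysis", "competitive_analysis", "structure", "seo_specs"]
MAX_TITLE_LENGTH = 60  # assumed house threshold, not a rule from this article


def quality_check(brief: dict) -> list[str]:
    """Return a list of flags for human review; an empty list means no issues found."""
    flags = [f"missing: {name}" for name in REQUIRED_ELEMENTS if not brief.get(name)]
    meta_title = (brief.get("seo_specs") or {}).get("meta_title", "")
    if len(meta_title) > MAX_TITLE_LENGTH:
        flags.append(f"meta title exceeds {MAX_TITLE_LENGTH} characters")
    return flags


# Hypothetical draft brief with an empty structure section.
draft = {
    "keyword_analysis": {"intent": "commercial"},
    "competitive_analysis": {"top_pages": 10},
    "structure": [],
    "seo_specs": {"meta_title": "Project Management Software for Small Teams"},
}
flags = quality_check(draft)
print(flags)  # ['missing: structure']
```

The function returns flags instead of raising an error, so a person decides whether a flagged brief still ships.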

Example: CRO Experiment Skill

Invocation: "Create an A/B test variant for this landing page focusing on trust signals"

What the skill does:

1. Page capture:

   - Create single-file HTML snapshot

   - Extract brand variables (colors, typography, spacing)

2. Analysis:

   - Identify current trust elements

   - Note gaps vs best practices

   - Review past client experiments if available

3. Variant creation:

   - Generate trust element additions

   - Apply using extracted brand variables

   - Ensure defensive CSS for deployment

4. Documentation:

   - Create hypothesis statement

   - Define success metrics

   - Specify test configuration

5. Deployment guidance:

   - Generate page builder-ready code

   - Include deployment checklist

   - Note cache-clearing requirements

Output: Complete variant HTML + documentation. Learn more about our rapid CRO experimentation process.

Improvement: After test completes, capture:

- Did the hypothesis hold?

- What was the lift (or loss)?

- What explains the outcome?
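Capturing "lift (or loss)" comes down to simple arithmetic: the relative change in conversion rate between control and variant. The sketch below uses made-up visitor and conversion counts, and it deliberately leaves out statistical significance, which a real test readout would also need.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Conversions divided by visitors."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control; negative means a loss."""
    if control_rate <= 0:
        raise ValueError("control rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical test: 1,000 visitors per arm.
control = conversion_rate(40, 1000)   # 4.0%
variant = conversion_rate(50, 1000)   # 5.0%
lift = relative_lift(control, variant)
print(f"{lift:.1%}")  # 25.0%
```

A one-point absolute gain (4% to 5%) is a 25% relative lift, which is why the outcome record should say which of the two it reports.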

The Strategic Advantage

Skills create defensible competitive advantages:

1. Execution Speed

Skills execute in minutes what manual processes take hours. Not because AI is magic, but because the methodology, context, and tools are already assembled.

2. Quality Consistency

Every execution follows the same methodology. Quality doesn't depend on who's doing the work or how much time they have.

3. Knowledge Accumulation

Every project teaches the skill something. After 500 executions, the skill embodies 500 projects' worth of learning.

4. Difficult to Replicate

Competitors can copy your current prompts. They can't copy your accumulated skill learning. They can't copy your client-specific context. They can't copy your 500-project history. This is the core principle behind our AI-amplified marketing approach.

5. Team Independence

Skills encode expertise. New team members can leverage skills immediately. Key person risk decreases. Organizational capability stabilizes.

Building Your Skill System

You can start building skills without infrastructure.

Minimum Viable Skills

Document your best prompts:

- What's the prompt?

- What context does it need?

- What makes it work vs fail?

Create methodology notes:

- Step-by-step process

- Decision criteria

- Common issues

Collect examples:

- Good inputs and outputs

- Edge cases with handling

- Failure cases with lessons

Store somewhere findable:

- Notion, Confluence, Google Docs

- Organized by capability

- Searchable and browsable

Intermediate System

Structured skill documents:

- Standard template for all skills

- Clear sections: methodology, context, examples, improvement

- Version tracking
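A standard template can be as simple as a function that stamps out the same sections for every skill. The sketch below assumes a plain-text document with a version line; `new_skill_doc`, the placeholder text, and the default version number are hypothetical.

```python
SECTIONS = ["Methodology", "Context", "Examples", "Improvement"]


def new_skill_doc(name: str, version: str = "0.1.0") -> str:
    """Return a blank skill document with the standard sections in order."""
    lines = [f"Skill: {name}", f"Version: {version}", ""]
    for section in SECTIONS:
        lines += [section, "(to be written)", ""]
    return "\n".join(lines)


doc = new_skill_doc("SEO Audit")
print(doc)
```

Keeping the skill docs in version control alongside this template gives you version tracking without extra tooling.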

Manual improvement loop:

- After significant projects, update relevant skills

- Quarterly review: what patterns emerged?

- Annual audit: what's outdated?

Advanced System (What We Built)

Programmatic skills:

- Skills as code/configuration

- Automated context loading

- API integrations built in

Systematic improvement:

- Outcome tracking automated

- Pattern analysis AI-assisted

- Updates proposed automatically

Full integration:

- Skills can be invoked from any interface

- Memory persistence across sessions

- Cross-skill knowledge sharing

Start Building Skills Today

If you're using AI with prompts, start evolving toward skills:

This week:

- Identify your most-used AI task

- Document the prompt and methodology

- Create a "skill doc" even if simple

This month:

- Add examples to your skill docs

- Capture context needed for each skill

- Track outcomes to identify improvement opportunities

This quarter:

- Build a skill library covering core tasks

- Establish improvement cadence

- Measure: are skills actually getting better?

Learn More

Interested in building skill-based AI systems? We've learned a lot building ours.

What we can share:

- Skill design patterns that work

- Improvement loop architectures

- Common failure modes and solutions

- Integration approaches for different tech stacks

Ready to build skill-based AI systems for your marketing operations? Learn more about our AI Integration services.

Contact us:

- Email: hello@wedoworldwide.com

- Website: wedoworldwide.com

Tell us about your AI workflow challenges. We'll share what we've learned that might help.

About the Author: Mike McKearin is the founder of WE-DO Growth Agency. Over three years, his team built a skill-based AI system with 40+ specialized skills that collectively execute work that would require 10x the team if done manually. He believes skills are the architecture that makes AI amplification actually work.